I want my Data Warehouse databases in Azure DevOps, just like my ETL projects, and use Continuous Integration (CI) and Continuous Delivery (CD) for multiple environments. How do you do that?

Figure: SQL Database in DevOps

Solution

If you read a couple of forums and blogs about this subject, you will probably notice that 'all roads lead to Rome': there are many ways to get this done. In this blog series we will explain our approach, but feel free to deviate.

Just for the record: with Continuous Integration (CI) we mean merging the code of all developers and building the project to check whether everything still works. Continuous Delivery (CD) orchestrates the deployment of the package created by CI throughout the DTAP environments in an automated manner, to reduce costs and speed up the release process.

For this example we work with four environments, each with its own Azure SQL database: Development, Test, Acceptance and Production. The Development database is a shared database where all developers do their coding.

The database changes since the last compare will be pushed to a Visual Studio Database project, which in turn will be committed to Git. After that the project will be built in DevOps and then deployed through all environments.

Figure: DTAP environments

Note: This approach with a single shared development database only works for smaller teams or when the database work can be separated to avoid conflicts. The alternative is to give each developer their own local development database. This will take a little more syncing and merging effort though.
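To make the promotion order concrete, here is a minimal Python sketch of the DTAP chain described above. It assumes that the Development database is filled by the developers themselves and that the release only deploys to the other three environments. The function names are made up for this illustration and are not part of Azure DevOps.

```python
from typing import List, Optional

# The four environments from this post, in promotion order.
DTAP: List[str] = ["Development", "Test", "Acceptance", "Production"]


def next_environment(current: str) -> Optional[str]:
    """Return the environment that follows `current`, or None after Production."""
    position = DTAP.index(current)  # raises ValueError for an unknown name
    if position + 1 < len(DTAP):
        return DTAP[position + 1]
    return None


def release_targets() -> List[str]:
    """Environments the release deploys to (assumption: all after Development)."""
    return DTAP[1:]


if __name__ == "__main__":
    for target in release_targets():
        print(f"deploy build artifact to {target}")
```

The point of the sketch is that the same build output moves from one environment to the next in a fixed order, and never skips a step.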

1) Visual Studio Database project

First, you need either Visual Studio 2017 or 2019. You have three options:

- SSDT standalone installer. This is Visual Studio 2017 with only the BI and DB project templates.

- Visual Studio 2017 (Community Edition is free). During installation locate Data storage and processing and select SQL Server Data Tools. Finding a download without an MSDN license is a bit tricky, since 2019 is the current version.

- Visual Studio 2019 (Community Edition is free). During installation locate Data storage and processing and select SQL Server Data Tools.

Figure: Data Storage and processing - SQL Server Data Tools

After installing you will find the SQL Server Database Project under SQL Server when you create a new project.

Figure: Create new Database Project

2) Import or Schema compare

Now that we have an empty database project we need to add the database items from our development database to this new project. You can either do an import of the database or do a Schema Compare between the database and the project.

When importing a new database you only have a couple of options, like not importing referenced logins or choosing the folder structure. I would suggest not importing any logins, because they are probably different in each environment. As folder structure I recommend Schema\Object Type, because I think this is the clearest structure. Note that you can only do an import once.
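To illustrate what the Schema\Object Type layout means for the files in your project, here is a small Python sketch that builds the relative path for an object. The folder names per object type and the example object names are assumptions for this illustration, so check them against what the import actually generates in your own project.

```python
from pathlib import PureWindowsPath

# Assumed folder names per object type; verify against your own project.
TYPE_FOLDERS = {
    "table": "Tables",
    "view": "Views",
    "procedure": "Stored Procedures",
    "function": "Functions",
}


def project_path(schema: str, object_type: str, name: str) -> str:
    """Build the relative file path: schema folder first, then object type."""
    folder = TYPE_FOLDERS[object_type]  # KeyError for types not listed above
    return str(PureWindowsPath(schema, folder, f"{name}.sql"))


if __name__ == "__main__":
    print(project_path("dbo", "table", "DimCustomer"))
    print(project_path("stg", "procedure", "LoadCustomer"))
```

With this layout all objects of one schema sit together, which is convenient when a Data Warehouse uses schemas to separate layers such as staging and reporting.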

Figure: Import Database

The second option is to do a schema compare, which gives you many more options for tuning the synchronization. The best feature is that you can repeat this any time you like to update the Visual Studio project.

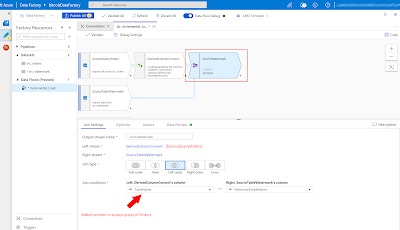

Right-click the project and choose Schema Compare... Then make sure the source database is on the left side and the Visual Studio project on the right side. Click on the options/settings icon to tune the comparison. In this case we will ignore users and role memberships.

Now press the Compare button and review all changes. This is especially important when you work in a team, because then you probably only want your own changes. Uncheck changes that are not yours or that are not ready to go to the next environment. By clicking on a change you can see the actual differences. The last step is to press the Update button to move all selected changes to the Visual Studio project. Before committing any changes to Git you should build your project to check if everything works.
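If you prefer to run that final build check from a script instead of from the Visual Studio menu, a minimal Python sketch could look like this. It assumes that `msbuild` is available on your PATH (for example in a Developer Command Prompt) and that your project file is called `DWH.sqlproj`; both are assumptions for this illustration.

```python
import shutil
import subprocess
from typing import List


def build_command(sqlproj: str, configuration: str = "Release") -> List[str]:
    """Assemble the MSBuild call for a database project file."""
    return ["msbuild", sqlproj, "/t:Build", f"/p:Configuration={configuration}"]


def build_succeeds(sqlproj: str) -> bool:
    """Run the build and report True when MSBuild exits with code 0."""
    if shutil.which("msbuild") is None:
        raise RuntimeError("msbuild was not found on PATH")
    return subprocess.run(build_command(sqlproj)).returncode == 0


if __name__ == "__main__":
    # Hypothetical project file name; replace it with your own .sqlproj.
    print(" ".join(build_command("DWH.sqlproj")))
```

Calling `build_succeeds("DWH.sqlproj")` before a commit gives the same yes-or-no answer as building in Visual Studio, without opening the IDE.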

Figure: Sql Schema Compare

Note: When working with multiple developers in a single development database you will probably encounter a couple of database changes that were not made by you. This is the point where you have to be selective about which changes you want to push to the database project.
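The selection described in this note is done with checkboxes in Schema Compare, but the logic behind it can be written down as a small filter. The Python sketch below is only an illustration of that reasoning: the `Difference` class, the type names and the object names are hypothetical and do not come from Visual Studio.

```python
from dataclasses import dataclass
from typing import AbstractSet, Iterable, List


@dataclass(frozen=True)
class Difference:
    """One row of a comparison result (hypothetical model, not an SSDT type)."""
    object_type: str  # e.g. "Table", "View", "User"
    name: str         # e.g. "dbo.DimCustomer"
    action: str       # "Add", "Change" or "Delete"


def select_changes(
    differences: Iterable[Difference],
    my_objects: AbstractSet[str],
    ignored_types: AbstractSet[str] = frozenset({"User", "RoleMembership"}),
) -> List[Difference]:
    """Keep only differences on my own objects, skipping ignored object types."""
    return [
        diff
        for diff in differences
        if diff.object_type not in ignored_types and diff.name in my_objects
    ]


if __name__ == "__main__":
    found = [
        Difference("Table", "dbo.DimCustomer", "Change"),
        Difference("View", "dbo.vwSales", "Add"),       # a colleague's work
        Difference("User", "etl_service", "Add"),       # ignored by type
    ]
    for diff in select_changes(found, my_objects={"dbo.DimCustomer"}):
        print(diff.action, diff.object_type, diff.name)
```

Everything that the filter drops stays in the development database and will show up again in the next compare, so nothing is lost by leaving it unchecked.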

Tip: When you have a lot of 'garbage' in your development database you could do a clean up by switching the source and target in the Sql Schema Compare and then push the update button. This will throw everything away that is not in your database project. It is probably a good idea to first create a backup.

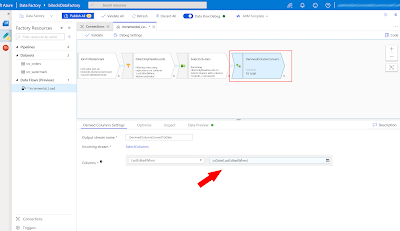

3) Add Schema Compare to project

A great feature of Schema Compare is that you can save the comparison, including its settings, as a file on your PC and then add it to your database project. The next time you only have to open it and click on the Compare button.

Figure: Add Compare file to db project

4) Dacpac to create or update

If you build the database project in Visual Studio or DevOps it will generate a .dacpac file, which can be used to create or update the next database in our DTAP environment. By default it can only update tables when they get bigger (extra columns or larger datatypes). This is to prevent loss of data. In a later post we will explain how to overcome this with a publish profile or a pre-deployment script.
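One way to publish a .dacpac outside Visual Studio is the SqlPackage command-line tool. The Python sketch below only assembles such a call to show where the data loss protection appears as a publish property (`BlockOnPossibleDataLoss`). The server, database and file names are placeholders, authentication arguments are left out on purpose, and this is not necessarily how the pipelines later in this series do it.

```python
from typing import List


def publish_command(
    dacpac: str,
    server: str,
    database: str,
    block_on_data_loss: bool = True,
) -> List[str]:
    """Assemble a SqlPackage Publish call (authentication arguments omitted)."""
    return [
        "SqlPackage",
        "/Action:Publish",
        f"/SourceFile:{dacpac}",
        f"/TargetServerName:{server}",
        f"/TargetDatabaseName:{database}",
        # True keeps the protection on: publish stops if data could be lost.
        f"/p:BlockOnPossibleDataLoss={block_on_data_loss}",
    ]


if __name__ == "__main__":
    # Placeholder names; replace them with your own file, server and database.
    command = publish_command("DWH.dacpac", "myserver.database.windows.net", "DWH_Test")
    print(" ".join(command))
```

Keeping the property on `True` matches the default behaviour described above. Switching it off is a deliberate choice that you would normally make per environment and only after checking what the deployment is going to change.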

Conclusion

In this introductory post you learned how to create a database project in Visual Studio and how to synchronize a database and a database project. In the next posts we will create a Build pipeline in DevOps to validate your committed database changes, and after that we will create a Release pipeline to release your committed database changes through the DTAP environment.

A downside of database projects in Visual Studio is that they can become very slow if you have many thousands of database objects. For those very large databases (not in size, but in structure) you could also take a look at commercial database tools like those of Redgate or SentryOne. However, the majority of Data Warehouse databases will probably fit easily in Visual Studio Database projects.