I'm deploying my SQL Server database via Azure DevOps with the SqlAzureDacpacDeployment@1 task in YAML, but it gives me a warning: ##[warning]The command 'Get-SpnAccessToken' is obsolete. Use Get-AccessTokenMSAL instead. This will be removed. The deployment still works, but the warning message is not very reassuring.

The command 'Get-SpnAccessToken' is obsolete. Use Get-AccessTokenMSAL instead. This will be removed

Solution

This warning message first appeared sometime in late 2022, and there is no new version of the dacpac deployment task available at the moment. Searching for the message shows that other tasks, such as AzureFileCopy@5, have the same issue. The word MSAL (Microsoft Authentication Library) in the message points to a newer way to acquire security tokens.

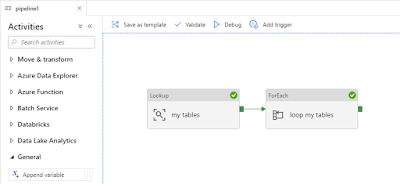

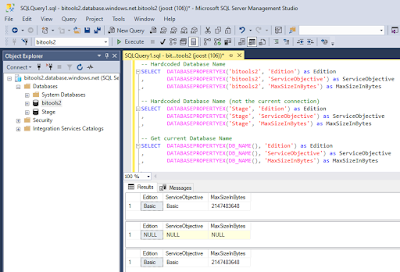

To get more information, you can run the pipeline in debug mode by enabling system diagnostics.

You will then see a lot of extra messages. Right above the warning there is a message showing that USE_MSAL is empty and that its default value is false.
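As a minimal sketch, the same diagnostics can also be switched on from the YAML itself by defining the system.debug variable instead of ticking the checkbox when queuing a run. Placing it in a top-level variables block in mapping form, as below, is an assumption about how your pipeline is laid out. If your pipeline already declares variables as a list of name/value pairs, the entry needs that form instead.

```yaml
# Enables verbose (debug) logging for every run of this pipeline.
# Remove it again after troubleshooting, because the logs get very long.
variables:
  system.debug: 'true'
```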

It is just a warning, and Microsoft will probably resolve it in a future version of the task. If you want to get rid of it now, you can set an environment variable called USE_MSAL to true within your pipeline. When it is set to true, the task uses MSAL instead of ADAL to obtain the authentication tokens from the Microsoft identity platform. The easiest way to do this is a PowerShell task that writes one logging command to the output: ##vso[task.setvariable variable=USE_MSAL]true. The variable is then available to the subsequent tasks in the same job, so this step must run before the deployment task.

    ###################################
    # USE_MSAL to avoid warning
    ###################################
    - powershell: |
        Write-Host "Setting USE_MSAL to true to force using MSAL instead of ADAL to obtain the authentication tokens."
        Write-Host "##vso[task.setvariable variable=USE_MSAL]true"
      displayName: '3 Set USE_MSAL to true'

    ###################################
    # Deploy DacPac
    ###################################
    - task: SqlAzureDacpacDeployment@1
      displayName: '4 Deploy DacPac'
      inputs:
        azureSubscription: '${{ parameters.ServiceConnection }}'
        AuthenticationType: 'servicePrincipal'
        ServerName: '${{ parameters.SqlServerName }}.database.windows.net'
        DatabaseName: '${{ parameters.SqlDatabaseName }}'
        deployType: 'DacpacTask'
        DeploymentAction: 'Publish'
        DacpacFile: '$(Pipeline.Workspace)/SQL_Dacpac/SQL/${{ parameters.SqlProjectName }}/bin/debug/${{ parameters.SqlProjectName }}.dacpac'
        PublishProfile: '$(Pipeline.Workspace)/SQL_Dacpac/SQL/${{ parameters.SqlProjectName }}/${{ parameters.SqlProjectName }}.publish.xml'
        IpDetectionMethod: 'AutoDetect'

After this, the warning no longer appears and your database still gets deployed. The extra step takes about a second to run.

Extra PowerShell Task

No more obsolete warnings
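A possible alternative, not tested for this post, is to declare USE_MSAL as a regular pipeline variable instead of adding an extra step. Non-secret pipeline variables are exposed to tasks as environment variables, so this should have the same effect, but it rests on the assumption that the deployment task reads the value from the environment in the same way. Treat it as a sketch and check the log of the deployment task after the first run.

```yaml
# Assumption: SqlAzureDacpacDeployment@1 picks up USE_MSAL from the
# environment, so a pipeline variable replaces the separate PowerShell step.
variables:
  USE_MSAL: 'true'
```

The PowerShell step keeps the workaround next to the task it affects, while a variable keeps the steps list shorter. Either way there is only one place to clean up once the task no longer needs it.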

Conclusion

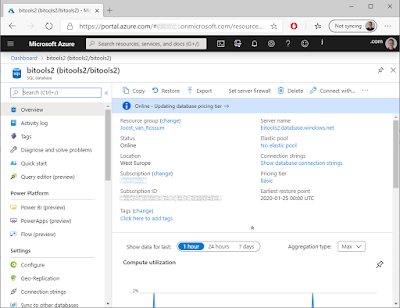

In this post you learned how to get rid of the annoying "The command 'Get-SpnAccessToken' is obsolete" warning by setting one environment variable to true. Check again in a few weeks or months whether this workaround is still necessary or whether a SqlAzureDacpacDeployment@2 version has become available.