Case

Azure Analysis Services has a new set of pricing tiers (Dev, B1, B2, S0, S1, S2, S3, S4, S8, S9). This makes it more useful to scale up and down to save money in Azure, rather than pausing it completely. How do I do that?

Solution

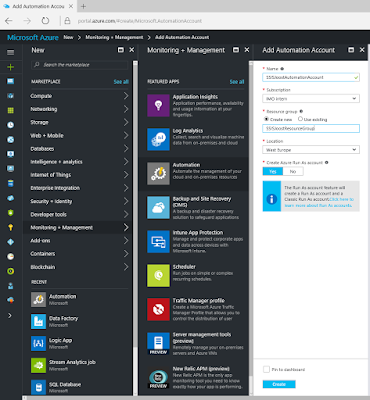

If you are using AAS for an environment that has fixed quiet hours, then you can downgrade or upgrade the tier with some PowerShell code in Azure Automation Runbooks. This could potentially save you a lot of money. If you are not using the system at all during certain hours, then you could even pause (and resume) AAS.

1) Automation Account

First we need an Azure Automation Account to run the Runbook. If you don't have one or want to create a new one, then search for Automation under Monitoring + Management and give it a suitable name, then select your subscription, resource group and location. For this example I will choose West Europe since I'm from the Netherlands. Also make sure the Create Azure Run as account option is on (we need it for step 3).

Figure: Azure Automation Account

2) Credentials

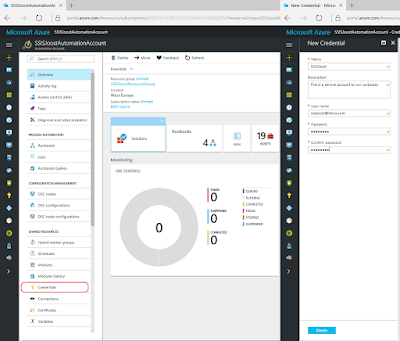

The next step is to create Credentials to run this runbook with. This works very similarly to the Credentials in SQL Server Management Studio. Go to the Azure Automation Account and click on Credentials in the menu. Then click on Add New Credentials. You could just use your own Azure credentials, but the best option is to use a service account with a non-expiring password. Otherwise you need to update the stored password regularly.

Figure: Create new credentials

3) Connections

This step is for your information only and to understand the code. Under Connections you will find a default connection named 'AzureRunAsConnection' that contains information about the Azure environment, like the tenant id and the subscription id. To prevent hardcoded values we will retrieve these fields in the PowerShell code.

Figure: Azure Connections

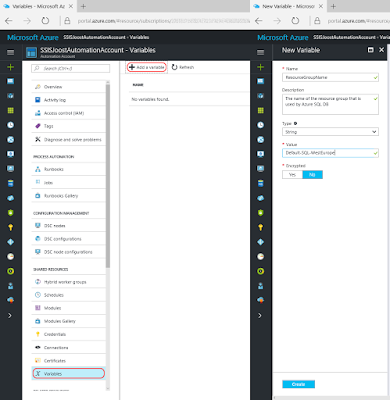

4) Variables

Another option to prevent hardcoded values in your PowerShell code is to use Variables. We will use this option to provide the Resource Group and the name of your Analysis Server. Go to Variables and add a new variable named ResourceGroupName that contains the name of the Resource Group used by your AAS. Then repeat this for the name of your AAS (not the Server Name value that starts with asazure://) and call it AnalysisServerName.

Figure: Add variables
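The same two variables could also be created from a PowerShell session instead of the portal. This is only a minimal sketch: it assumes the AzureRM.Automation module is installed, that you are already logged in, and every name and value below is a placeholder rather than something from this post.

```powershell
# Placeholder names (assumptions): replace them with your own values
$AutomationResourceGroup = "MyAutomationResourceGroup"
$AutomationAccountName   = "MyAutomationAccount"

# Variable that holds the Resource Group used by the AAS instance
New-AzureRmAutomationVariable -ResourceGroupName $AutomationResourceGroup `
    -AutomationAccountName $AutomationAccountName `
    -Name "ResourceGroupName" -Value "MyAasResourceGroup" -Encrypted $false

# Variable that holds the name of the AAS instance (not the asazure:// value)
New-AzureRmAutomationVariable -ResourceGroupName $AutomationResourceGroup `
    -AutomationAccountName $AutomationAccountName `
    -Name "AnalysisServerName" -Value "myaasserver" -Encrypted $false
```

The variable names must match the ones that the runbook reads with Get-AutomationVariable.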

5) Modules

The Azure Analysis Services methods (cmdlets) are in a separate PowerShell module which is not included by default. If you do not add this module you will get errors telling you that the method is not recognized.

> The term 'Get-AzureRmAnalysisServicesServer' is not recognized as the name of a cmdlet, function, script file, or operable program.

Go to the Modules page and check whether you see AzureRM.AnalysisServices in the list. If not then use the 'Browse gallery' button to add it, but first add AzureRM.Profile because the Analysis module will ask for it. Adding the modules could take a few minutes!

If you already have AzureRM.AnalysisServices, then make sure it is at least version 0.4.0 (June 8, 2017), because older versions have a small bug that doesn't allow you to change the tier.

Figure: Add modules
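If you prefer to check the module version from code, something like the sketch below could be run in a test runbook or a local session. The function name Test-AasModuleVersion is made up for this example; only the minimum version 0.4.0 comes from the text above.

```powershell
# Hypothetical helper: returns $true when the installed version is new enough.
# Casting to [version] compares numerically, so 0.10.0 counts as newer than 0.4.0.
function Test-AasModuleVersion {
    param(
        [Parameter(Mandatory = $true)][version]$Installed,
        [version]$Minimum = "0.4.0"
    )
    return ($Installed -ge $Minimum)
}

# Find the newest installed copy of the module (empty if it is not installed)
$Module = Get-Module -ListAvailable -Name "AzureRM.AnalysisServices" |
    Sort-Object Version -Descending |
    Select-Object -First 1

if (-not $Module) {
    Write-Output "AzureRM.AnalysisServices is not installed."
}
elseif (Test-AasModuleVersion -Installed $Module.Version) {
    Write-Output "AzureRM.AnalysisServices $($Module.Version) is new enough."
}
else {
    Write-Output "AzureRM.AnalysisServices $($Module.Version) is too old, update it first."
}
```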

6) Runbooks

Now it is time to add a new Azure Runbook for the PowerShell code. Click on Runbooks and then add a new runbook (There are also four example runbooks of which AzureAutomationTutorialScript could be useful as an example). Give your new Runbook a suitable name and choose PowerShell as type.

Figure: Add Azure Runbook

7) Edit Script

After clicking Create in the previous step, the editor will open. When editing an existing Runbook you need to click on the Edit button to edit the code. You can copy and paste the code below to your editor. Study the green comments to understand the code. Also make sure to compare the variable names in the code to the ones created in step 4 and change them if necessary.

Figure: Edit the PowerShell code

```powershell
# PowerShell code

# Don't continue in case of an error
$ErrorActionPreference = "Stop"

# Connect to a connection to get TenantId and SubscriptionId
$Connection = Get-AutomationConnection -Name "AzureRunAsConnection"
$TenantId = $Connection.TenantId
$SubscriptionId = $Connection.SubscriptionId

# Get the service principal credentials connected to the automation account.
$null = $SPCredential = Get-AutomationPSCredential -Name "SSISJoost"

# Login to Azure ($null is to prevent output, since Out-Null doesn't work in Azure)
Write-Output "Login to Azure using automation account 'SSISJoost'."
$null = Login-AzureRmAccount -TenantId $TenantId -SubscriptionId $SubscriptionId -Credential $SPCredential

# Select the correct subscription
Write-Output "Selecting subscription '$($SubscriptionId)'."
$null = Select-AzureRmSubscription -SubscriptionID $SubscriptionId

# Get variable values
$ResourceGroupName = Get-AutomationVariable -Name 'ResourceGroupName'
$AnalysisServerName = Get-AutomationVariable -Name 'AnalysisServerName'

# Get old status (for testing/logging purpose only)
$OldAsSetting = Get-AzureRmAnalysisServicesServer -ResourceGroupName $ResourceGroupName -Name $AnalysisServerName

# changing tier
Write-Output "Upgrade $($AnalysisServerName) to S1. Current tier: $($OldAsSetting.Sku.Name)"
Set-AzureRmAnalysisServicesServer -ResourceGroupName $ResourceGroupName -Name $AnalysisServerName -Sku "S1"
Write-Output "Done"

# Get new status (for testing/logging purpose only)
$NewAsSetting = Get-AzureRmAnalysisServicesServer -ResourceGroupName $ResourceGroupName -Name $AnalysisServerName
Write-Output "New tier: $($NewAsSetting.Sku.Name)"
```

Note 1: This is a very basic script. No error handling has been added. Check the AzureAutomationTutorialScript for an example. Fine-tune it for your own needs.

Note 2: There are often two versions of a method, like Get-AzureRmAnalysisServicesServer and Get-AzureAnalysisServicesServer. Always use the one with "Rm" in it (Resource Manager), because that one is for the new Azure portal. The one without Rm is for the old/classic Azure portal.

Note 3: Because Azure Automation doesn't support Out-Null, I used another trick with $null =. The Write-Outputs, however, are for testing purposes only. Nobody sees them when the runbook runs on a schedule.

Note 4: You can upscale from basic (Bx) to standard (Sx), but you cannot downscale from standard to basic!
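The restriction in Note 4 could be checked before calling Set-AzureRmAnalysisServicesServer, so that the runbook fails with a clear message. The sketch below is one possible way to do that. The function name Test-TierChangeAllowed is hypothetical, and it only looks at the first letter of the tier name (B for basic, S for standard).

```powershell
# Hypothetical helper: returns $false for a move from standard (Sx) to basic (Bx),
# which Note 4 says is not possible. All other changes are assumed to be allowed.
function Test-TierChangeAllowed {
    param(
        [Parameter(Mandatory = $true)][string]$CurrentTier,
        [Parameter(Mandatory = $true)][string]$TargetTier
    )
    $fromStandard = $CurrentTier -like "S*"   # -like is case-insensitive
    $toBasic      = $TargetTier -like "B*"
    return -not ($fromStandard -and $toBasic)
}

# Possible use in the runbook, after $OldAsSetting has been retrieved:
# if (-not (Test-TierChangeAllowed -CurrentTier $OldAsSetting.Sku.Name -TargetTier "B1")) {
#     throw "Cannot scale from $($OldAsSetting.Sku.Name) down to B1."
# }
```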

8) Testing

You can use the Test Pane menu option in the editor to test your PowerShell scripts. When you click on Run, the script is first queued before it starts. Running takes a couple of minutes.

Figure: Testing the script in the Test Pane

9) Publish

When your script is ready, it is time to publish it. Click on the Publish button above the editor. Confirm overwriting any previously published versions.

Figure: Publish the Runbook

10) Schedule

Now that we have a working and published Azure Runbook, we need to schedule it. Click on Schedule to create a new schedule for your runbook. For the scale down script I created a schedule that runs every working day at 9:00 PM (21:00) to scale down the machine. The scale up script could, for example, be scheduled on working days at 7:00 AM. Hit the refresh button in the Analysis Services overview in Azure to see whether it really works. It takes a few minutes to run, so don't worry too soon.

Figure: Add Schedule
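The schedule can probably also be created with PowerShell instead of the portal. The sketch below assumes a version of the AzureRM.Automation module that supports weekly schedules with -DaysOfWeek, and that the runbook is already published. All names are placeholders for this example, so check the parameters against your own module version before relying on it.

```powershell
# Placeholder names (assumptions): replace them with your own values
$AutomationResourceGroup = "MyAutomationResourceGroup"
$AutomationAccountName   = "MyAutomationAccount"
$RunbookName             = "ScaleDownAAS"
$ScheduleName            = "WorkingDays2100"

# First run: tomorrow at 21:00 (the start time has to lie in the future)
$StartTime = (Get-Date).Date.AddDays(1).AddHours(21)

# Weekly schedule that only fires on working days
New-AzureRmAutomationSchedule -ResourceGroupName $AutomationResourceGroup `
    -AutomationAccountName $AutomationAccountName `
    -Name $ScheduleName -StartTime $StartTime `
    -WeekInterval 1 `
    -DaysOfWeek @("Monday", "Tuesday", "Wednesday", "Thursday", "Friday")

# Link the published runbook to the schedule
Register-AzureRmAutomationScheduledRunbook -ResourceGroupName $AutomationResourceGroup `
    -AutomationAccountName $AutomationAccountName `
    -RunbookName $RunbookName -ScheduleName $ScheduleName
```

A second schedule at 7:00 AM, linked to the scale up runbook, would follow the same pattern.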

Summary

In this post you saw how you can scale your Azure Analysis Services instance up or down to save some money in Azure during the quiet hours. The code and screenshot only show a scale up from S0 to S1, but you could make a separate scale down script or parameterize the tier. Another option is to use a fancy if-construction that uses the current tier and/or time to decide whether you need to scale up or scale down.
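The if-construction mentioned above could look something like the sketch below. The function name Get-TargetTier, the tiers S1 and S0, and the 7:00 to 21:00 window on working days are assumptions based on the schedule example in this post. Also check which time zone your runbook uses, because Get-Date in Azure Automation may return UTC rather than your local time.

```powershell
# Hypothetical helper: picks a tier based on the day of the week and the hour.
function Get-TargetTier {
    param(
        [datetime]$Now      = (Get-Date),
        [string]$BusyTier   = "S1",   # tier during working hours (assumption)
        [string]$QuietTier  = "S0"    # tier during quiet hours (assumption)
    )
    $isWorkingDay = $Now.DayOfWeek -notin @([DayOfWeek]::Saturday, [DayOfWeek]::Sunday)
    $isBusyHour   = ($Now.Hour -ge 7) -and ($Now.Hour -lt 21)

    if ($isWorkingDay -and $isBusyHour) {
        return $BusyTier
    }
    return $QuietTier
}

# Possible use in the runbook, after $OldAsSetting has been retrieved:
# $TargetTier = Get-TargetTier
# if ($OldAsSetting.Sku.Name -ne $TargetTier) {
#     Set-AzureRmAnalysisServicesServer -ResourceGroupName $ResourceGroupName `
#         -Name $AnalysisServerName -Sku $TargetTier
# }
```

With this approach a single runbook can be scheduled at both times, and it only changes the tier when the current tier differs from the target.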

For more information about all Azure Analysis Services cmdlets that are included in the AzureRM.AnalysisServices module, see the documentation of that module.