I want to send email notifications when my ETL process fails, but I don't want to create an extra Office 365 account just for sending email notifications.

Image: Sending free emails in Azure

Solution

Microsoft added the third-party service SendGrid to the Azure Marketplace under 'Software as a Service (SaaS)'. Its free tier allows you to send up to 25000 emails a month, which is hopefully more than enough for a couple of ETL failure notifications.

In this blog post we will show you how to create a SendGrid account in Azure and then how to send email notifications via ADF, Logic Apps and SendGrid. In a second post we will show you that you can also use this in an Azure Automation Runbook to send notifications about the up- or downscale and the pause or resume of services.

Part 1: Create SendGrid Account

1) Create new resource

The first step is to create a SendGrid resource which you can find in the Azure marketplace.

- Go to the Azure Portal and create a new resource

- Search in the Marketplace for SendGrid or find it under the topic Software as a Service (SaaS)

- Select SendGrid and then click on the Create button

- Give your new resource a useful name and a secure password

- Select the right subscription and Resource Group

- Choose the pricing tier you need (F1 is free) and optionally enter a Promotion Code if you expect to send millions of emails a month

- Fill out the contact details, which will be sent to SendGrid (Twilio) for support reasons

- Review (and accept) the legal terms

- Click on the Create button

Image: Create Azure SendGrid account

These first steps create a SendGrid resource in Azure, but they also create a SendGrid account at sendgrid.com. You will receive an email to activate that account on sendgrid.com. Note that the pricing details can be changed in Azure, but all the other (non-Azure) settings can only be edited on sendgrid.com.

2) Create API key

To send emails via SendGrid we first need the API key. This key can only be generated on the sendgrid.com website.

- Go to the Azure SendGrid resource and click on the Manage button on the overview page. This will redirect you to the sendgrid.com website

- Go to Settings in the left menu and expand the sub menu items

- Go to the API Keys and click on the Create API Key button.

- Then enter a name for your API key, choose which permissions you need and click on the Create and View button.

- Copy and save this API key in a password manager. This is the only time the key is shown, so you cannot retrieve it later
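To check that the new API key works before wiring it into a Logic App, you could call SendGrid directly. The sketch below builds a request for SendGrid's public v3 Mail Send endpoint using only the Python standard library. The function names, the email addresses and the way you store the key are assumptions for illustration and are not part of the original solution.

```python
import json
import urllib.request

# Public SendGrid Web API v3 endpoint for sending mail.
SENDGRID_URL = "https://api.sendgrid.com/v3/mail/send"


def build_mail_request(api_key, sender, recipient, subject, body):
    """Build (but do not send) a SendGrid v3 Mail Send request."""
    payload = {
        "personalizations": [{"to": [{"email": recipient}]}],
        "from": {"email": sender},
        "subject": subject,
        "content": [{"type": "text/plain", "value": body}],
    }
    return urllib.request.Request(
        SENDGRID_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # The API key created above is passed as a bearer token.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request) as response:
        return response.status
```

A call such as `send(build_mail_request(key, "etl@example.com", "me@example.com", "Test", "Hello"))` would then perform the actual request. Both addresses are placeholders, and `key` should come from a secure store rather than from source code.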

Part 2: Use SendGrid in LogicApps

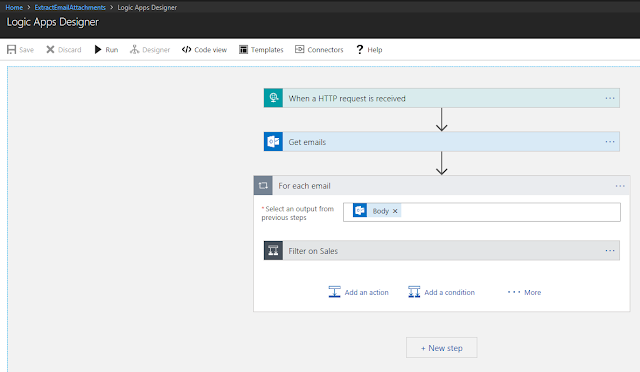

In a previous post we showed you how to send an email notification in Azure Data Factory (ADF) via Logic Apps. That solution consists of two parts: ADF (error handling) and Logic Apps (send email). For this post we will only slightly change the Logic Apps part of that solution. Instead of using the Office 365 Outlook - Send an email action we will use the SendGrid - Send email action.

Image: Replace Office 365 Outlook by SendGrid

The communication between these two Azure resources is done with a JSON message via an HTTP POST request. The JSON message contains the name of the Data Factory, the name of the pipeline that failed, an error message and the email address to send the notification to. The JSON schema below describes that message:

    {
        "properties": {
            "DataFactoryName": {
                "type": "string"
            },
            "PipelineName": {
                "type": "string"
            },
            "ErrorMessage": {
                "type": "string"
            },
            "EmailTo": {
                "type": "string"
            }
        },
        "type": "object"
    }
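To make the schema concrete, here is a minimal Python sketch of a message that matches it, together with a small check that all four properties are present and are strings. The sample values and the function name are made up for illustration. Note that the schema above does not mark any property as required, so this strict check is an assumption and not something the Logic App enforces.

```python
import json

# The four string properties described by the schema above.
EXPECTED_FIELDS = ("DataFactoryName", "PipelineName", "ErrorMessage", "EmailTo")


def validate_message(raw):
    """Parse a JSON message and check that it carries all four string fields."""
    message = json.loads(raw)
    if not isinstance(message, dict):
        raise ValueError("message must be a JSON object")
    for field in EXPECTED_FIELDS:
        if not isinstance(message.get(field), str):
            raise ValueError(f"'{field}' must be present and must be a string")
    return message


# Hypothetical example message, as ADF could post it to the Logic App.
sample = json.dumps({
    "DataFactoryName": "MyDataFactory",
    "PipelineName": "LoadSales",
    "ErrorMessage": "Copy activity failed: source file not found",
    "EmailTo": "etl-team@example.com",
})

message = validate_message(sample)
```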

1) Create new Logic App

Let's create an Azure Logic App that receives parameters from ADF and sends an email using these parameters.

- Click on Create a resource on the Azure portal, search for Logic App, select it and hit the Create button. You can also locate Logic App under Integration in the Azure Marketplace.

- Pick a descriptive name like "ADF-Notifications"

- Then select the Subscription, Resource Group and Location

- Now hit the Create button and wait for it to be generated

Image: Create new Logic App

2) HTTP Trigger

The next step is to edit the newly created Logic App and to pick a trigger. This is the event that starts this Logic App to send emails. To call it from the ADF Web(hook) activity we need an HTTP trigger.

- Go to your new Logic App and edit it

- Pick the HTTP trigger When a HTTP request is received

- Edit the trigger and copy and paste the JSON message from above into the big text-area.

Image: Adding the HTTP trigger

Adding the JSON message to the HTTP trigger will generate new variables for the next action that will be used to generate the email message.

3) Send an email

This next step deviates from the previous post and adds the SendGrid action to send emails. At the time of writing there is a v3 and a v4 preview version. For important processes you probably should not choose the preview version.

- Add a New Step

- Search for SendGrid and select it

- Choose the Send email action

- Give the connection a name, paste the SendGrid API key and click on Create

- The From-field is a hardcoded email address in this example

- The To-field comes from the variable 'EmailTo' generated by the JSON message in the HTTP trigger

- The Subject is a concatenation of the Data Factory name and the pipeline name

- Body comes from the 'ErrorMessage' variable

- Save the Logic App. The first save action will reveal the HTTP post URL.

Note that the top of the dynamic content window did not appear on the screen at first. Collapsing the HTTP trigger above it did the trick.

Image: Adding SendGrid - Send email action
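The field mapping of the Send email action can be sketched in a few lines of Python. This only illustrates which value ends up in which email field; the real mapping is configured with dynamic content in the Logic App designer. The function name, the sender address and the exact wording and separator of the subject are assumptions.

```python
def compose_email(message, sender="etl-notifications@example.com"):
    """Map the fields of the incoming JSON message to the email fields."""
    return {
        # Hardcoded sender address, as in the example above.
        "from": sender,
        # Recipient comes from the 'EmailTo' property of the message.
        "to": message["EmailTo"],
        # Subject concatenates the Data Factory name and the pipeline name.
        "subject": f"{message['DataFactoryName']} - {message['PipelineName']} failed",
        # Body is the error message passed in by ADF.
        "body": message["ErrorMessage"],
    }


email = compose_email({
    "DataFactoryName": "MyDataFactory",
    "PipelineName": "LoadSales",
    "ErrorMessage": "Copy activity failed",
    "EmailTo": "etl-team@example.com",
})
```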

Tip: keep the message setup dynamic by using the variables from the HTTP trigger, so that you can reuse the same Logic App for all your ADF pipelines.

4) Copy URL from HTTP trigger

The Logic App is ready. Click on the HTTP trigger and copy the URL. We need this in ADF to trigger the Logic App.

Image: Copy the URL for ADF
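Before switching to ADF you may want to test the Logic App on its own by posting a sample message to the copied URL. The sketch below builds such a request with the Python standard library. The function name and all sample values are hypothetical, and the URL must be replaced by the one copied from your own HTTP trigger.

```python
import json
import urllib.request


def build_trigger_request(logic_app_url, data_factory, pipeline, error, email_to):
    """Build (but do not send) the POST request that starts the Logic App."""
    body = {
        "DataFactoryName": data_factory,
        "PipelineName": pipeline,
        "ErrorMessage": error,
        "EmailTo": email_to,
    }
    return urllib.request.Request(
        logic_app_url,
        data=json.dumps(body).encode("utf-8"),
        # The HTTP trigger expects a JSON body that matches its schema.
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder URL: paste the URL copied from the HTTP trigger here.
request = build_trigger_request(
    "https://example.invalid/logic-app-trigger-url",
    "MyDataFactory",
    "LoadSales",
    "Test message from Python",
    "etl-team@example.com",
)
```

Calling `urllib.request.urlopen(request)` would then send the test message and should result in an email to the address in `EmailTo`.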

Part 3: Set up Data Factory

The next step is adding a Web activity to the ADF pipeline to call the Logic App from above that sends the email. Since nothing has changed in the ADF part of the solution since last time, you can follow our previous post for that part.

Summary

In this post we showed you how to use SendGrid in Logic Apps to send you an email notification in case of a failing pipeline in Azure Data Factory. This saves you a daily login to the Azure portal to check the pipelines monitor. An additional advantage of using SendGrid over an Office 365 account, as in the previous post, is that it is free as long as you do not send more than 25000 emails a month, and you don't have to ask the administrator for an Office 365 account.

In a second blog post about SendGrid we will show you how to use it in an Azure Automation Runbook. This allows you to send notifications with PowerShell when for example your runbook fails.