We sometimes mess up when creating pull requests in Azure DevOps by accidentally selecting the wrong source or target branch. These mistakes caused unwanted situations where changes got promoted to the wrong environment too early. Resolving those mistakes often takes a lot of time. Is there a way to prevent these easily made mistakes?

|

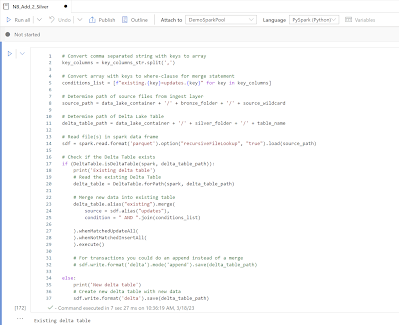

| Build Validation for Pull Requests |

Solution

The first option is to set up a four-eyes principle where someone else has to approve your work, including the choice of branches for your pull request. This can be done by setting the branch policies. Note that you need to do this for all your branches (excluding feature/personal branches).

Another option is a build validation in which a script compares the source branch with the target branch. Combining these two options is even better, but if you (sometimes) work alone then the build validation could be an alternative.

Note that this solution only works if you have at least two branches and you are also using feature branches.

1) Create extra YAML file

Add an extra YAML file called ValidatePullRequest.yml in your current YAML folder.

The code of the new file can be found below, but you have to change two things in this code. The first is the list of your branch names in order (the $branches variable). If you only have a main and a development branch then it will be [String[]]$branches = "main", "development". The second is the name/wildcard of the hotfix/bugfix branches that need to be ignored by this check (the $fixBranch variable). So if your bugfix branches always contain the word hotfix then it will become [String]$fixBranch = "hotfix". The script uses the -like operator to compare the name.

    trigger: none

    steps:
    - task: PowerShell@2
      displayName: 'Validate branches in Pull Request'
      inputs:
        targetType: 'inline'
        script: |
          #######################################################################
          # PARAMETERS FOR SCRIPT
          #######################################################################
          # All branches in order. You can only do a pull request one up or down.
          # Feature and / or personal branches can only be pulled to or from the
          # last branch in the row.
          [String[]]$branches = "main", "acceptance", "test", "development", "sprint"

          # Bugfix or hotfix branches can be pulled to and from all other branches
          [String]$fixBranch = "bugfix"

          #######################################################################
          # DO NOT CHANGE CODE BELOW
          #######################################################################
          # System.PullRequest.SourceBranch contains the full ref (refs/heads/...),
          # because a sourceBranchName variable does not exist
          $SourceBranchName = "$(System.PullRequest.SourceBranch)".ToLower().Replace("refs/heads/", "")
          $TargetBranchName = "$(System.PullRequest.targetBranchName)"

          function getBranchNumber
          {
              <#
                  .SYNOPSIS
                  Get the order number of the branch by looping through all branches and checking the branch name
                  .PARAMETER BranchName
                  Name of the branch you want to check
                  .EXAMPLE
                  getBranchNumber -BranchName "myBranch"
              #>
              param (
                  [string]$BranchName
              )

              # Loop through branches array to find a specific branch name
              for ($i = 0; $i -lt $branches.Count; $i++)
              {
                  # Find specific branch name (-eq is case-insensitive)
                  if ($branches[$i] -eq $BranchName)
                  {
                      # Return branch order number
                      # (one-based instead of zero-based)
                      return $i + 1
                  }
              }

              # Unknown branch = feature branch
              return $branches.Count + 1
          }

          # Retrieve branch order
          $SourceBranchId = getBranchNumber -BranchName $SourceBranchName
          $TargetBranchId = getBranchNumber -BranchName $TargetBranchName

          # Show extra information to check the outcome of the check below
          Write-Host "All branches in order: [$($branches -join "] <-> [")] <-> [feature branches]."
          Write-Host "Checking pull request from $($SourceBranchName) [$($SourceBranchId)] to $($TargetBranchName) [$($TargetBranchId)]."

          if ($SourceBranchName -like "*$($fixBranch)*")
          {
              # Pull requests for bugfix branches are unrestricted
              Write-Host "Pull requests for bugfix or hotfix branches are unrestricted."
              exit 0
          }
          elseif ([math]::Abs($SourceBranchId - $TargetBranchId) -le 1)
          {
              # Not skipping branches or going from feature branch to feature branch
              Write-Host "Pull request is valid."
              exit 0
          }
          else
          {
              # Invalid pull request that skips one or more branches
              Write-Host "##vso[task.logissue type=error]Pull request is invalid. Skipping branches is not allowed."
              exit 1
          }

In this GitHub repository you will find the latest version of the code

Note 1: The PowerShell script within the YAML returns either 0 (success) or 1 (failure), and the Write-Host for the failure contains a little ##vso block. This logging command allows you to write errors that will show up in the logging of DevOps.
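As a minimal sketch of the pattern from Note 1, the helper below wraps the ##vso[task.logissue] logging command. The function name Format-PipelineIssue and the example messages are made up for this illustration and are not part of the original script.

```powershell
# Hypothetical helper (not part of the original script): builds an
# Azure DevOps logging command that reports an error or a warning.
function Format-PipelineIssue {
    param (
        [ValidateSet("error", "warning")]
        [string]$Type,
        [string]$Message
    )
    # The format operator avoids any ambiguity around "$" inside the string.
    return "##vso[task.logissue type={0}]{1}" -f $Type, $Message
}

# A warning line, for situations you want to flag in the log.
Write-Host (Format-PipelineIssue -Type "warning" -Message "Target branch is not in the known list.")

# An error line; the validation script pairs this with "exit 1".
Write-Host (Format-PipelineIssue -Type "error" -Message "Pull request is invalid.")
# exit 1   # left commented out so the sketch can also be tried in a local console
```

Keeping the logging command in one place makes it easier to add more checks later without retyping the ##vso prefix.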

Note 2: The script is fairly flexible in the number of branches that you want to use, but it does require a standard order of branches. All unknown branches are considered to be feature branches. If you have a naming convention for feature branches then you could refine the IF statement to also validate that naming convention to annoy/educate your co-workers even more.
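The refinement hinted at in Note 2 could look like the sketch below. It assumes a hypothetical convention where feature branches are named feature/ followed by lowercase letters, digits or dashes. The function name Test-FeatureBranchName and the pattern are illustrative only, and bugfix/hotfix branches would still need to be handled before this check.

```powershell
# Known branches, as configured in the validation script.
[String[]]$branches = "main", "acceptance", "test", "development", "sprint"

# Assumed convention (hypothetical): feature/<lowercase letters, digits or dashes>
[String]$featurePattern = '^feature/[a-z0-9-]+$'

function Test-FeatureBranchName {
    param (
        [string]$BranchName
    )
    # Known branches are not feature branches, so the convention does not apply.
    if ($branches -contains $BranchName) {
        return $true
    }
    # -cmatch is case-sensitive, so "Feature/X" would be rejected.
    return ($BranchName -cmatch $featurePattern)
}

Test-FeatureBranchName -BranchName "feature/add-logging"   # True
Test-FeatureBranchName -BranchName "my-experiment"         # False
Test-FeatureBranchName -BranchName "development"           # True
```

In the pipeline script, a False result could be reported with the same ##vso error line and exit 1 that is used for skipped branches.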

2) Create new pipeline

Now create a new pipeline based on the newly created YAML file from step 1.

- Go to Pipelines in Azure DevOps.

- Click on the New Pipeline button to create the new pipeline.

- Choose the repository type (Azure DevOps Git in our example)

- Select the right repository if you have multiple repos

- Select Existing Azure Pipelines YAML file.

- Select the branch and then the new YAML file under Path.

- Save it

- Optionally rename the Pipeline name to give it a more readable name (often the repository name will be used as a default name)

3) Add Build Validation to branch

Now that we have the new YAML pipeline, we can use it as a Build Validation. The example shows how to add them in an Azure DevOps repository.

- In DevOps go to Repos in the left menu.

- Then click Branches to get all branches.

- Now hover your mouse over the first branch and click on the three vertical dots.

- Click Branch policies

- Click on the + button in the Build Validation section.

- Select the new pipeline created in step 2 (optionally change the Display name)

- Click on the Save button

Repeat these steps for all branches where you need the extra check. Don't add them on feature branches because it will also prevent you from making manual changes in these branches.
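Repeating the clicks for every branch gets tedious when you have many branches. As an alternative sketch, the Azure DevOps extension for the Azure CLI offers az repos policy build create. The ids below are placeholders, the helper name Get-BuildPolicyArguments is made up for this example, and the loop only prints the commands as a dry run. It also assumes you are logged in and have default organization and project settings configured, so treat it as an outline to adapt rather than a tested procedure.

```powershell
# Placeholders (hypothetical values): replace with your own ids.
$repositoryId      = "<repository-guid>"
$buildDefinitionId = "<pipeline-id>"
[String[]]$branches = "main", "acceptance", "test", "development", "sprint"

function Get-BuildPolicyArguments {
    param (
        [string]$Branch
    )
    # Argument list for: az repos policy build create
    return @(
        "repos", "policy", "build", "create",
        "--repository-id", $repositoryId,
        "--branch", $Branch,
        "--build-definition-id", $buildDefinitionId,
        "--display-name", "ValidatePullRequest",
        "--blocking", "true",
        "--enabled", "true",
        "--manual-queue-only", "false",
        "--queue-on-source-update-only", "false",
        "--valid-duration", "0"
    )
}

foreach ($branch in $branches) {
    $arguments = Get-BuildPolicyArguments -Branch $branch
    # Dry run: only print the command. To apply it, run: az @arguments
    Write-Host "az $($arguments -join ' ')"
}
```

Printing first makes it easy to review which branches get the policy before anything is changed in DevOps.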

4) Testing

Now it's time to perform a 'valid' and an 'invalid' pull request. You will see that the validation will first be queued. So this extra validation will take a little extra time, especially when you have a busy agent. However, you can just continue working and wait for the approval, or even press Set auto-complete to automatically complete the Pull Request when all approvals and validations have succeeded.

If the validation fails you will see the error message, and then you can click on it to also find the output of the two regular Write-Host lines: the list of branches and the branch names with their branch order number. This should help you to figure out what mistake you made.

For the 'legal' pull request you will first see the validation being queued and then you will see the green circle with the check mark. You can click on it to see why this was a valid Pull request.
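Because every test through a real pull request has to wait for an agent, it can help to try the rule in a local PowerShell console first. The sketch below re-implements the same ordering rule in two functions with names chosen for this example (Get-BranchNumber and Test-PullRequestBranches) and runs it against a few source/target pairs. It mirrors the logic of the pipeline script but is not the pipeline script itself.

```powershell
[String[]]$branches = "main", "acceptance", "test", "development", "sprint"
[String]$fixBranch = "bugfix"

function Get-BranchNumber {
    param ([string]$BranchName)
    for ($i = 0; $i -lt $branches.Count; $i++) {
        if ($branches[$i] -eq $BranchName) { return $i + 1 }
    }
    # Unknown branch = feature branch, placed after the last known branch.
    return $branches.Count + 1
}

function Test-PullRequestBranches {
    param ([string]$Source, [string]$Target)
    # Fix branches are unrestricted, as in the pipeline script.
    if ($Source -like "*$($fixBranch)*") { return $true }
    $sourceId = Get-BranchNumber -BranchName $Source
    $targetId = Get-BranchNumber -BranchName $Target
    return ([math]::Abs($sourceId - $targetId) -le 1)
}

$cases = @(
    @{ Source = "feature/new-report"; Target = "sprint" },       # one step: valid
    @{ Source = "sprint";             Target = "development" },  # one step: valid
    @{ Source = "development";        Target = "main" },         # skips two branches: invalid
    @{ Source = "bugfix/typo";        Target = "main" }          # fix branch: unrestricted
)

foreach ($case in $cases) {
    $ok = Test-PullRequestBranches -Source $case.Source -Target $case.Target
    Write-Host "$($case.Source) -> $($case.Target): $ok"
}
```

Changing the $branches list here to match your own setup gives a quick preview of which pull requests the build validation would accept.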

Conclusions

In this post you learned how to create Build Validations for your Pull Requests in Azure DevOps. In this case we used a simple script to compare the source and target branch of a Pull Request. In a second example, coming online soon, we will validate the naming conventions within a Synapse Workspace (or ADF) during a Pull Request. This will prevent your colleagues (you are of course always following the rules) from not following the naming conventions because the Pull Request will automatically fail.

Special thanks to colleague Joan Zandijk for creating the initial version of the YAML and PowerShell script for the branch check.