Can I use a Logic App to execute an SSIS package that is deployed to the Azure Integration Runtime environment (ADF V2)? Logic App has several triggers that would make a useful starting point for an SSIS package execution, for example when a new file is added to a Blob Storage container, Dropbox, OneDrive or an (S)FTP folder. How do I do that?

[Image: Logic App]

Solution

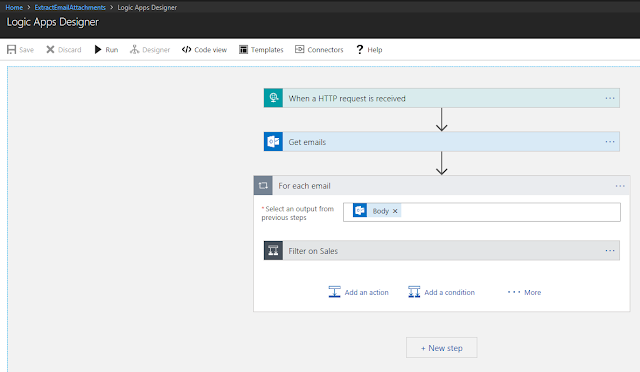

If you want an event, rather than a schedule in ADF, to start an SSIS package, then Logic App can be very handy. You can accomplish the same in SSIS with some custom code or perhaps a third-party component, but Logic App is much easier and probably cheaper as well.

For this example we will start an existing package when a new file is added to a certain Azure Blob Storage container. The Blob Storage Container and the SSIS package in the Integration Runtime environment already exist.

1) Logic App

Create a new Logic App by clicking on the + sign (Create new resource) in the Azure portal. It is located under Integration. Give it a descriptive name like "ExecuteSsisWhenBlobFileIsCreated". Once the Logic App has been created, choose the Blank Logic App template to start.

[Image: Create new Logic App]

2) Azure Blob Storage trigger

For this example we will use a trigger on an Azure Blob Storage container: "When a blob is added or modified (properties only) (Preview)". Search for Blob and you will find the right trigger. Create a connection to the right Azure Blob Storage account (if you have already created one within the same resource group, then that one will be reused). Select the correct container and set the polling interval to suit your needs.

[Image: Logic App Blob Storage Trigger]

3) SQL Server Execute Query

The next step is to create the SQL code that executes our package(s). You can easily generate this in SSMS. Go to your package in the Catalog, right-click it and choose Execute... Now set all options, such as Logging Level, Environment and 32-bit/64-bit runtime. After setting all options, hit the Script button instead of the OK button. This is the code you want to use. You can fine-tune it with some extra code to check whether the package finished successfully.

[Image: Generating code in SSMS]

The code below was generated and then fine-tuned. Copy it (or use your own code) for the next step.

    -- Variables for execution and error message
    DECLARE @execution_id bigint, @err_msg NVARCHAR(150)

    -- Create execution and fill @execution_id variable
    EXEC [SSISDB].[catalog].[create_execution]
        @package_name=N'Package.dtsx',
        @execution_id=@execution_id OUTPUT,
        @folder_name=N'SSISJoost',
        @project_name=N'MyAzureProject',
        @use32bitruntime=False,
        @reference_id=Null,
        @useanyworker=True,
        @runinscaleout=True

    -- Set logging level: 0=None, 1=Basic, 2=Performance, 3=Verbose
    EXEC [SSISDB].[catalog].[set_execution_parameter_value] @execution_id,
        @object_type=50,
        @parameter_name=N'LOGGING_LEVEL',
        @parameter_value=1

    -- Set synchronized option: 0=A-SYNCHRONIZED, 1=SYNCHRONIZED
    -- A-SYNCHRONIZED: don't wait for the result
    EXEC [SSISDB].[catalog].[set_execution_parameter_value] @execution_id,
        @object_type=50,
        @parameter_name=N'SYNCHRONIZED',
        @parameter_value=1

    -- Execute the package with parameters from above
    EXEC [SSISDB].[catalog].[start_execution] @execution_id, @retry_count=0

    -- Check if the package executed successfully (only for SYNCHRONIZED execution)
    IF (SELECT [status] FROM [SSISDB].[catalog].[executions] WHERE execution_id=@execution_id) <> 7
    BEGIN
        SET @err_msg=N'Your package execution did not succeed for execution ID: ' + CAST(@execution_id AS NVARCHAR(20))
        RAISERROR(@err_msg,15,1)
    END
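The final check in the script compares the `status` column of `[SSISDB].[catalog].[executions]` with 7, which is the code for "succeeded". As a small reference, the sketch below lists the status codes in Python; `describe_status` is a hypothetical helper name used only for illustration and is not part of the original solution.

```python
# Status codes of the [status] column in [SSISDB].[catalog].[executions].
EXECUTION_STATUS = {
    1: "created",
    2: "running",
    3: "canceled",
    4: "failed",
    5: "pending",
    6: "ended unexpectedly",
    7: "succeeded",
    8: "stopping",
    9: "completed",
}


def describe_status(status: int) -> str:
    """Hypothetical helper: turn a status code into a readable message.

    Mirrors the T-SQL check: only status 7 counts as a successful run.
    """
    name = EXECUTION_STATUS.get(status, "unknown")
    outcome = "OK" if status == 7 else "not OK"
    return f"status {status} ({name}): {outcome}"


if __name__ == "__main__":
    for code in (4, 7):
        print(describe_status(code))
```

With a synchronized execution the status has reached a final value by the time the check runs. With an a-synchronized execution the package may still be in status 2 (running), which is why the check only makes sense for the synchronized option.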

Now back to the Logic App. Add a new action called SQL Server Execute Query (not stored procedure) and create a connection to the SSISDB where your packages are located. Paste your code to execute your package in the query field of this new action.
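If you need the same query for several packages, you could generate the text to paste instead of editing it by hand. The sketch below is only an illustration of that idea: `build_execution_sql` and `quote_nvarchar` are hypothetical helper names, and the success check from the original script is left out to keep it short. It uses only the catalog procedures and parameters that appear in the script above.

```python
def quote_nvarchar(value: str) -> str:
    """Return a T-SQL N'...' literal, doubling embedded single quotes."""
    return "N'" + value.replace("'", "''") + "'"


def build_execution_sql(folder: str, project: str, package: str,
                        logging_level: int = 1,
                        synchronized: bool = True) -> str:
    """Hypothetical helper: build the T-SQL that starts one catalog package."""
    if logging_level not in (0, 1, 2, 3):
        raise ValueError("logging_level must be 0, 1, 2 or 3")
    lines = [
        "DECLARE @execution_id bigint",
        # Create the execution and capture its ID
        "EXEC [SSISDB].[catalog].[create_execution]"
        f" @package_name={quote_nvarchar(package)},"
        " @execution_id=@execution_id OUTPUT,"
        f" @folder_name={quote_nvarchar(folder)},"
        f" @project_name={quote_nvarchar(project)},"
        " @use32bitruntime=False, @reference_id=Null,"
        " @useanyworker=True, @runinscaleout=True",
        # Logging level: 0=None, 1=Basic, 2=Performance, 3=Verbose
        "EXEC [SSISDB].[catalog].[set_execution_parameter_value] @execution_id,"
        " @object_type=50, @parameter_name=N'LOGGING_LEVEL',"
        f" @parameter_value={logging_level}",
        # 1 = wait for the package to finish, 0 = return immediately
        "EXEC [SSISDB].[catalog].[set_execution_parameter_value] @execution_id,"
        " @object_type=50, @parameter_name=N'SYNCHRONIZED',"
        f" @parameter_value={1 if synchronized else 0}",
        "EXEC [SSISDB].[catalog].[start_execution] @execution_id, @retry_count=0",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_execution_sql("SSISJoost", "MyAzureProject", "Package.dtsx"))
```

The printed text can be pasted into the query field of the SQL Server Execute Query action. Doubling the single quotes keeps folder, project or package names that contain an apostrophe from breaking the statement.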

[Image: Action SQL Server Execute Query]

Note: When creating your SSISDB in ADF, make sure the firewall option 'Allow Azure services to access' is turned on for the server that hosts it; otherwise the Logic App will not be able to connect.

4) Testing

Save your Logic App and add a new file to the selected Blob Storage Container. Then watch the Runs history of the Logic App and the Execution Report in the Integration Services Catalog to view the result.

Summary

This post explained how to execute an SSIS package from a Logic App trigger instead of scheduling it in Azure Data Factory. If you are using the Stored Procedure Activity in ADF to execute SSIS packages, then you can reuse this code. A later post will show an alternative to Logic App.

Note: steps to turn your Integration Runtime off or on can be added with an Azure Automation action.