I'm using the new and improved ARM export via npm to generate an ARM template for my Data Factory so I can deploy it to the next environment. However, both the Validate step and the Validate and Generate ARM template step throw an error saying that the arm-template-parameters-definition.json file can't be found. This file isn't mentioned in the steps in the documentation. How do I add this file, and what content should be in it?


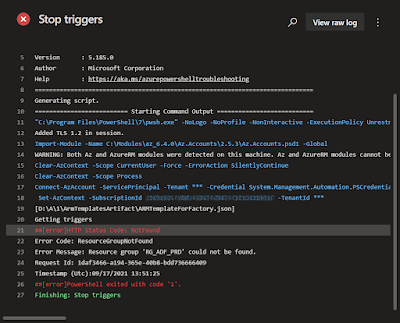

Unable to read file: arm-template-parameters-definition.json

ERROR === LocalFileClientService: Unable to read file: D:\a\1\arm-template-parameters-definition.json, error: {"stack":"Error: ENOENT: no such file or directory, open 'D:\\a\\1\\arm-template-parameters-definition.json'","message":"ENOENT: no such file or directory, open 'D:\\a\\1\\arm-template-parameters-definition.json'","errno":-4058,"code":"ENOENT","syscall":"open","path":"D:\\a\\1\\arm-template-parameters-definition.json"}

WARNING === ArmTemplateUtils: _getUserParameterDefinitionJson - Unable to load custom param file from repo, will use default file. Error: {"stack":"Error: ENOENT: no such file or directory, open 'D:\\a\\1\\arm-template-parameters-definition.json'","message":"ENOENT: no such file or directory, open 'D:\\a\\1\\arm-template-parameters-definition.json'","errno":-4058,"code":"ENOENT","syscall":"open","path":"D:\\a\\1\\arm-template-parameters-definition.json"}

Solution

This step is indeed not mentioned in the new documentation, but you can find it if you know where to look. The log shows an ERROR followed by a WARNING: the custom parameter file could not be read, so the build continues with a default file. Very annoying, but it does not block your pipeline. To solve it, follow these steps:
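The fallback described in the WARNING can be illustrated with a small Python sketch: try to read the custom definition file from the root folder and, if it is missing, report it and carry on with a default. This is not the npm package's actual code; the function name load_parameter_definition and the tiny DEFAULT_DEFINITION placeholder are hypothetical and only show why the missing file is reported but does not stop the build.

```python
import json
import tempfile
from pathlib import Path

DEFINITION_FILE = "arm-template-parameters-definition.json"

# Hypothetical placeholder: the real default definition shipped with the
# npm package is much larger and is not reproduced here.
DEFAULT_DEFINITION = {"Microsoft.DataFactory/factories/pipelines": {}}


def load_parameter_definition(root_folder):
    """Return (definition, source), where source is 'custom' or 'default'."""
    path = Path(root_folder) / DEFINITION_FILE
    try:
        # utf-8-sig also accepts a file saved with a byte-order mark.
        with path.open(encoding="utf-8-sig") as handle:
            return json.load(handle), "custom"
    except FileNotFoundError as err:
        # Like the WARNING in the log: report it, fall back, keep going.
        print(f"WARNING: unable to load custom param file ({err.strerror}); using default file")
        return DEFAULT_DEFINITION, "default"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        _, source = load_parameter_definition(root)  # folder without the file
        print("definition source:", source)
```

Once the file exists in the root folder, the same call returns the custom definition instead of the default.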

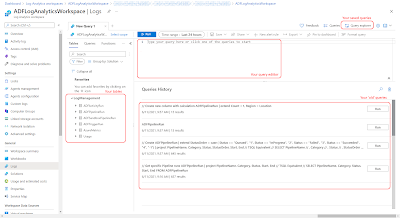

1) Edit Parameter configuration

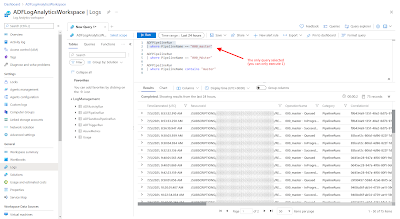

Go to your development ADF and open Azure Data Factory Studio. In the left menu click on the Manage icon (a toolbox) and then click on ARM template under Source Control. Now you will see the option 'Edit parameter configuration'. Click on it.


Edit parameter configuration

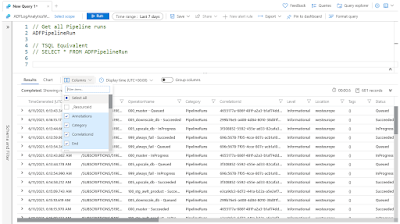

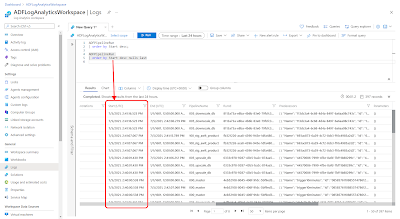

2) Save Parameter configuration

A new JSON file now opens (you can adjust it to your needs, but more on that in a later post), and the Name box above it shows 'arm-template-parameters-definition.json'. Click the OK button and go to the Azure DevOps repository.
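The question also asks what content belongs in the file. As a rough orientation, and assuming the syntax described in Microsoft's documentation on custom parameters for Data Factory Resource Manager templates (not the exact file ADF generates for you), each parameterized property gets a value of the form '<action>:<name>:<stype>', for example '|:-connectionString:secureString' for a linked-service connection string. The hypothetical helper parse_parameter_spec below splits such a value into its parts to make the notation concrete; it is a reading aid only, and the npm build does not need it.

```python
# Actions as described in Microsoft's custom-parameter documentation.
ACTIONS = {
    "=": "keep the current value as the parameter's default value",
    "-": "do not keep a default value",
    "|": "secret from Azure Key Vault (connection strings or keys)",
}
# Assumption: type names are compared case-insensitively ('secureString').
SUPPORTED_TYPES = {"string", "securestring", "int", "bool", "object", "secureobject", "array"}


def parse_parameter_spec(spec):
    """Split an '<action>:<name>:<stype>' value into its three parts."""
    parts = spec.split(":")
    if len(parts) > 3:
        raise ValueError(f"too many ':' separators in {spec!r}")
    # Pad missing trailing parts with "" so '=' behaves like '=::'.
    action, name, stype = parts + [""] * (3 - len(parts))
    if action not in ACTIONS:
        raise ValueError(f"unknown action {action!r} in {spec!r}")
    stype = (stype or "string").lower()  # a blank type defaults to string
    if stype not in SUPPORTED_TYPES:
        raise ValueError(f"unsupported type {stype!r} in {spec!r}")
    return {
        "action": action,
        "name": name or None,  # None: the property name is used instead
        "shortened": name.startswith("-"),  # leading '-' shortens the name
        "type": stype,
    }


if __name__ == "__main__":
    for example in ("=", "|:-connectionString:secureString"):
        print(example, "->", parse_parameter_spec(example))
```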

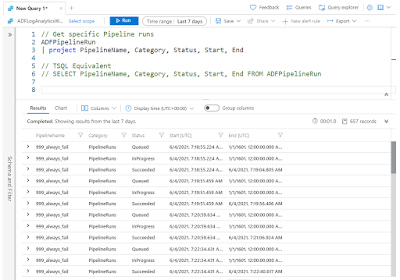


arm-template-parameters-definition.json

3) The result

In the Azure DevOps repository you will now find a new file in the root of the ADF folder, where subfolders such as pipeline and dataset are also located. Run the DevOps pipeline again and you will notice that the error and the warning are gone.
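To double-check the result before rerunning the pipeline, a small Python sketch can confirm that the file sits in the ADF root folder next to the pipeline and dataset subfolders and parses as JSON. The function name check_adf_root and the list of subfolders it looks for are assumptions for illustration; adjust them to your own repository layout.

```python
import json
import sys
from pathlib import Path

DEFINITION_FILE = "arm-template-parameters-definition.json"
# Assumption: these subfolders mark the ADF root folder in the Git repository.
EXPECTED_SUBFOLDERS = ("pipeline", "dataset")


def check_adf_root(root_folder):
    """Return a list of problems; an empty list means the layout looks right."""
    root = Path(root_folder)
    problems = [f"missing subfolder: {name}"
                for name in EXPECTED_SUBFOLDERS if not (root / name).is_dir()]
    definition = root / DEFINITION_FILE
    if not definition.is_file():
        problems.append(f"missing file: {DEFINITION_FILE}")
        return problems
    try:
        # utf-8-sig tolerates a byte-order mark at the start of the file.
        json.loads(definition.read_text(encoding="utf-8-sig"))
    except json.JSONDecodeError as err:
        problems.append(f"invalid JSON in {DEFINITION_FILE}: {err.msg} (line {err.lineno})")
    return problems


if __name__ == "__main__":
    folder = sys.argv[1] if len(sys.argv) > 1 else "."
    issues = check_adf_root(folder)
    print("\n".join(issues) if issues else "ADF root folder looks OK")
```

Run it from a local clone with the ADF root folder as its argument; an empty result means the file is where the build expects it.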


The new file has been added to the repository by ADF

Note that you only have to do this for the development Data Factory (not for test, acceptance or production), and that the ARM template parameter configuration is only available for Git-enabled data factories.

Conclusion

In this post you learned how to solve the "arm-template-parameters-definition.json not found" error and warning. The next step is to learn more about this feature and its use cases. Most often it is used to add extra parameters for properties that aren't parameterized by default. This will be explained in a future post.


In another follow-up post we will describe the entire Data Factory ARM deployment, in which you don't need to hit that annoying Publish button in the Data Factory GUI. Everything (CI and CD) will be a YAML pipeline.

Thanks to my colleague Roelof Jonkers for helping.