I am using a gallery on my overview page to show all records, and on my details page I want a button that goes to the next item in the gallery without first going back to the overview page.

(Image: Generated App with dynamic Next button)

Solution

For this example we will use a generated PowerApp based on a product table that came from the AdventureWorks database.

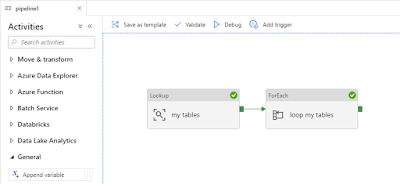

In this generated app you know which item is selected in the product gallery, but not which product comes next, because there is no built-in function or property for that. To solve this we will:

- Create a new collection based on the gallery with only the key column (to keep it small).

- Then create a copy of that collection with an extra column that contains an auto-increment (auto-number).

(Image: A preview of both collections)

1) Pass the product key via a variable

The first step is to slightly change the navigation command on the overview page: we pass a context variable containing the active product key to the details page. In the OnSelect property of the gallery, extend the existing code with one extra argument:

```power-fx
// Old code
Navigate(
    DetailScreen1,
    ScreenTransition.None
)

// New code (with the variable)
Navigate(
    DetailScreen1,
    ScreenTransition.None,
    {MyProductKey: BrowseGallery1.Selected.ProductKey}
)
```

(Image: Change Navigate in OnSelect of gallery)
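To check that the key actually arrives on the details page, you could temporarily add a label to DetailScreen1 and set its Text property to a formula like the one below. This is only a debugging sketch; the label is a hypothetical control that you would delete once everything works.

```power-fx
// Text property of a temporary (hypothetical) label on DetailScreen1.
// MyProductKey is the context variable passed in by Navigate;
// the & operator converts the number to text.
"Selected ProductKey: " & MyProductKey
```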

2) Change the Item property on the details page

On the details page, which shows all columns/attributes of the product, we need to change the Item property of the Display form. The standard code retrieves the selected item from the gallery on the overview page; we will use the variable from step 1 instead.

```power-fx
// Old code
BrowseGallery1.Selected

// New code
// Get the first row from dataset 'MyProducts'
// that is filtered with our new variable
First(
    Filter(
        '[dbo].[MyProducts]',
        ProductKey = MyProductKey
    )
)
```

(Image: Change Item of Display form)
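The First(Filter(...)) combination can also be written with LookUp, which returns the first record that matches a condition. A sketch of the same Item property, assuming the same '[dbo].[MyProducts]' data source and MyProductKey variable:

```power-fx
// Item property of the Display form on DetailScreen1:
// returns the first record whose ProductKey equals
// the context variable passed in by Navigate
LookUp(
    '[dbo].[MyProducts]',
    ProductKey = MyProductKey
)
```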

3) Add a Next button

The last part of this solution is to add a button with quite a lot of code in its OnSelect property. Adding a row number to a collection can be done in a couple of ways; the flexible approach used here is well documented on powerappsguide.com.

```power-fx
// Create a new collection with only the key column
// from the gallery (key = ProductKey)
ClearCollect(
    MyProductGallery,
    ShowColumns(
        BrowseGallery1.AllItems,
        "ProductKey"
    )
);

// Create a new collection based on the first
// collection with one extra column that
// contains an auto-increment number "MyId"
Clear(MyNumberedProductGallery);
ForAll(
    MyProductGallery,
    Collect(
        MyNumberedProductGallery,
        Last(
            FirstN(
                AddColumns(
                    MyProductGallery,
                    "MyId",
                    CountRows(MyNumberedProductGallery) + 1
                ),
                CountRows(MyNumberedProductGallery) + 1
            )
        )
    )
);

// Retrieve the next item from the gallery and
// store its key (ProductKey) in a variable
// called 'MyNextProductKey'
// Steps:
// 1) use LookUp to retrieve the number (MyId) of the current product
// 2) use a Filter to keep only records with a higher number
// 3) get the First record from the filtered collection
// 4) get its ProductKey column and store it in a variable
UpdateContext(
    {
        MyNextProductKey: First(
            Filter(
                MyNumberedProductGallery,
                MyId > LookUp(
                    MyNumberedProductGallery,
                    ProductKey = MyProductKey,
                    MyId
                )
            )
        ).ProductKey
    }
);

// Only when MyNextProductKey is not empty,
// change the value of MyProductKey, which
// makes the Display form retrieve new data.
// An empty value only occurs for the last
// record. The If statement could be extended,
// for example by navigating back to the
// overview page when it is empty:
// Navigate(BrowseScreen1, ScreenTransition.None)
If(
    !IsBlank(MyNextProductKey),
    UpdateContext({MyProductKey: MyNextProductKey})
)
```

(Image: Adding a Next button to the details screen)
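If your version of Power Apps has the Sequence and Index functions, the numbering step can be written more compactly. The sketch below swaps in that technique instead of the Clear/ForAll/Collect approach from powerappsguide.com, and it suggests building both collections once in the OnVisible property of DetailScreen1, since the gallery normally does not change while the details screen is open. Both the location and the availability of these functions are assumptions to check in your own app; if your version expects column names without quotes, write ProductKey instead of "ProductKey".

```power-fx
// DetailScreen1.OnVisible (suggested location, not the original setup):
// build both collections once when the details screen opens.

// Same first step as in the Next button: keep only the key column
ClearCollect(
    MyProductGallery,
    ShowColumns(
        BrowseGallery1.AllItems,
        "ProductKey"
    )
);

// Alternative numbering step (assumes Sequence, Index and ThisRecord exist):
// Sequence(n) returns a one-column table with the numbers 1..n in a
// column called Value. For each number, Index picks that row from
// MyProductGallery and Patch merges an extra MyId column into it.
ClearCollect(
    MyNumberedProductGallery,
    ForAll(
        Sequence(CountRows(MyProductGallery)),
        Patch(
            Index(MyProductGallery, ThisRecord.Value),
            {MyId: ThisRecord.Value}
        )
    )
)
```

With the collections built when the screen opens, the Next button's OnSelect would only need the UpdateContext and If parts from step 3.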

To add a Previous button, change the filter to 'smaller than' and select the last record instead of the first:

```power-fx
UpdateContext(
    {
        MyPrevProductKey: Last(
            Filter(
                MyNumberedProductGallery,
                MyId < LookUp(
                    MyNumberedProductGallery,
                    ProductKey = MyProductKey,
                    MyId
                )
            )
        ).ProductKey
    }
);
```
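The snippet above only stores the key of the previous record. To actually move to it, a Previous button could finish with the same kind of check as the Next button. This sketch assumes MyNumberedProductGallery already exists when the button is clicked, for example because the collection steps from the Next button are repeated at the start of this OnSelect.

```power-fx
// MyPrevProductKey is only empty for the first record in the
// gallery; in that case MyProductKey keeps its current value
If(
    !IsBlank(MyPrevProductKey),
    UpdateContext({MyProductKey: MyPrevProductKey})
)
```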

4) The Result

Now let's start the app and test the Next button. The nice part is that you can filter or sort the gallery any way you like, and the Next button will still go to the next record in that gallery.

(Image: Testing the new Next button)

Summary

In this post you learned how to retrieve the next (and previous) item from a gallery. You could also add this code to a save button to create a save-and-edit-next-record action, which saves users a few clicks because they no longer have to go back to the overview page to find the next record to edit.

For an "approve and go to next record" button, where the gallery gets smaller each time you approve a record, you could also do something very simple: change the Item property of your form so it always shows the first item in the gallery.

```power-fx
// Old code
BrowseGallery1.Selected

// Alternative code
First(BrowseGallery1.AllItems)
```