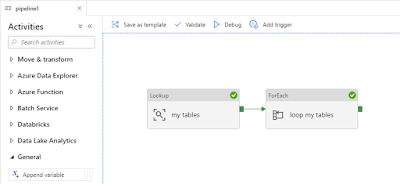

There are several ways to process Azure Analysis Services models, such as Logic Apps, Azure Automation runbooks and even SSIS, but is there an Azure Data Factory-only solution that uses nothing but the pipeline activities from ADF?

Process Azure Analysis Services

Solution

Yes, you can use the Web activity to call the REST API of Azure Analysis Services (AAS), but that requires you to give ADF permissions in AAS via its managed identity (MSI).

1) Create ADF service principal

In the next step we need a user that we can add as a Server Administrator of AAS. Because the managed identity of ADF does not show up when you search for a user account, we have to construct its name ourselves. This 'user' is called a service principal.

- Go to ADF in the Azure portal (not the Author & Monitor environment)

- In the left menu click on Properties which you can find under General

- Copy the 'Managed Identity Application ID' and the 'Managed Identity Tenant' properties to a notepad and construct the following string for the next step:

app:<Application ID>@<Tenant> (replace the <xxx> placeholders with the copied property values)

app:653ca9f9-855c-45df-bfff-3e7718159295@d903b4cb-ac8c-4e31-964c-e630a3a0c05e

Create app user from ADF for AAS
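The string construction above can be sketched in a few lines of Python. This is only an illustration: the helper name `build_aas_admin_name` is hypothetical, and the GUID check assumes that both the application ID and the tenant are GUIDs, as they are in the example above.

```python
import uuid


def build_aas_admin_name(application_id: str, tenant_id: str) -> str:
    """Return the 'app:<Application ID>@<Tenant>' string for the Manual Entry textbox."""
    # uuid.UUID raises ValueError when a value is not a well-formed GUID,
    # which catches most copy/paste mistakes before they reach SSMS.
    app = str(uuid.UUID(application_id.strip()))
    tenant = str(uuid.UUID(tenant_id.strip()))
    return f"app:{app}@{tenant}"


# Values taken from the example in this post.
print(build_aas_admin_name(
    "653ca9f9-855c-45df-bfff-3e7718159295",
    "d903b4cb-ac8c-4e31-964c-e630a3a0c05e",
))
```

Validating the values first is a design choice, not a requirement: a stray space or a truncated GUID is easier to spot here than in an SSMS error message.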

2) Add user as Server Administrator

Now we need to connect to your Azure Analysis Services via SQL Server Management Studio (SSMS) to add the user from the previous step as a Server Administrator. This cannot be done via the Azure portal.

- Log in to your AAS with SSMS

- Right click your server and choose Properties

- Go to the Security pane

- Click on the Add... button

- Add the service principal from the previous step via the Manual Entry textbox and click on the Add button

- Click on OK to close the properties window

Add Server Administrator via Manual Entry

After this step the 'user' will appear in the portal as well, but you cannot add it via the portal.

Analysis Services Admins

3) Add Web Activity

In your ADF pipeline you need to add a Web activity to call the REST API of Analysis Services. The first step is to determine the REST API URL. In the string below, replace the <xxx> values with the region, server name and model name of your Analysis Services. The REST API method we will be using is 'refreshes':

https://<region>.asazure.windows.net/servers/<servername>/models/<modelname>/refreshes

Example:

https://westeurope.asazure.windows.net/servers/bitoolsserver/models/bitools/refreshes
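As a minimal sketch, the URL pattern above can be filled in with a small Python helper. The function name `build_refresh_url` is hypothetical, and percent-encoding the server and model names is an assumption made here so that names containing spaces still produce a valid URL.

```python
from urllib.parse import quote


def build_refresh_url(region: str, server: str, model: str) -> str:
    """Fill in the 'refreshes' URL pattern for an AAS model."""
    base = f"https://{region}.asazure.windows.net"
    # safe='' also encodes '/' so a name can never add an extra path segment.
    return (
        f"{base}/servers/{quote(server, safe='')}"
        f"/models/{quote(model, safe='')}/refreshes"
    )


# Values taken from the example in this post.
print(build_refresh_url("westeurope", "bitoolsserver", "bitools"))
```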

The second step is to create a JSON message for the REST API that tells AAS what to process. To fully process the entire model you can use this message:

    {
        "Type": "Full",
        "CommitMode": "transactional",
        "MaxParallelism": 2,
        "RetryCount": 2,
        "Objects": []
    }

Or you can process particular tables within the model with a message like this:

    {
        "Type": "Full",
        "CommitMode": "transactional",
        "MaxParallelism": 2,
        "RetryCount": 2,
        "Objects": [
            {
                "table": "DimProduct",
                "partition": "CurrentYear"
            },
            {
                "table": "DimDepartment"
            }
        ]
    }

See the documentation for all the parameters that you can use.
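If you want to generate these messages rather than type them by hand, something like the following Python sketch could work. The helper `build_refresh_body` is hypothetical; it only reproduces the properties shown in the two messages above and does not validate them against the API.

```python
import json


def build_refresh_body(objects=None, refresh_type="Full",
                       commit_mode="transactional",
                       max_parallelism=2, retry_count=2) -> str:
    """Return the JSON message for the 'refreshes' call.

    An empty 'Objects' list means the entire model is processed.
    """
    body = {
        "Type": refresh_type,
        "CommitMode": commit_mode,
        "MaxParallelism": max_parallelism,
        "RetryCount": retry_count,
        "Objects": list(objects or []),
    }
    return json.dumps(body, indent=2)


# Entire model.
print(build_refresh_body())

# Only the tables and partition used in the example above.
print(build_refresh_body(objects=[
    {"table": "DimProduct", "partition": "CurrentYear"},
    {"table": "DimDepartment"},
]))
```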

- Add the Web activity to your pipeline

- Give it a descriptive name like Process Model

- Go to the Settings tab

- Use the REST API URL from above in the URL property

- Choose POST as Method

- Add the JSON message from above in the Body property

- Under Advanced, choose MSI as the Authentication method

- Add 'https://*.asazure.windows.net' in the Resource property (note this URL is different for suspending and resuming AAS)

Web Activity calling the AAS REST API

Then debug the pipeline to check the process result
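For readers who prefer the code view of the pipeline, the settings listed above roughly correspond to an activity definition like the one this Python sketch prints. Treat it as an approximation: the property names follow the Web activity JSON as I understand it from the ADF documentation, the helper `build_web_activity` is hypothetical, and you should compare the output with what your own pipeline's code view shows.

```python
import json


def build_web_activity(name, url, body,
                       resource="https://*.asazure.windows.net"):
    """Return a dict that mirrors the Web activity settings from the steps above."""
    return {
        "name": name,
        "type": "WebActivity",
        "typeProperties": {
            "url": url,
            "method": "POST",
            "body": body,
            # MSI authentication with the AAS resource, as chosen under Advanced.
            "authentication": {"type": "MSI", "resource": resource},
        },
    }


activity = build_web_activity(
    name="Process Model",
    url="https://westeurope.asazure.windows.net/servers/bitoolsserver/models/bitools/refreshes",
    body={
        "Type": "Full",
        "CommitMode": "transactional",
        "MaxParallelism": 2,
        "RetryCount": 2,
        "Objects": [],
    },
)
print(json.dumps(activity, indent=2))
```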

4) Retrieve refreshes

By changing only the method from POST to GET (the Body property will disappear), you can retrieve information about the processing status and use that information in subsequent pipeline activities.

Retrieve process status via GET
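To illustrate how that status information could drive the next activities, here is a small Python sketch of the decision logic. It assumes the GET call returns a list of refresh records with `refreshId` and `status` fields, and that `status` takes values such as `notStarted`, `inProgress`, `succeeded`, `failed`, `cancelled` and `timedOut`. Check the REST API documentation for the exact response shape. The sample records and the function name `next_step` are made up for this example.

```python
# Statuses that mean the refresh has not finished yet (assumed values).
WAITING = {"notStarted", "inProgress"}


def next_step(status: str) -> str:
    """Map a refresh status to what the pipeline should do next."""
    if status == "succeeded":
        return "continue"
    if status in WAITING:
        return "wait"
    # failed, cancelled, timedOut or anything unexpected.
    return "fail"


# Hypothetical sample of what the GET call might return.
sample_refreshes = [
    {"refreshId": "refresh-1", "status": "succeeded"},
    {"refreshId": "refresh-2", "status": "inProgress"},
    {"refreshId": "refresh-3", "status": "failed"},
]

for refresh in sample_refreshes:
    print(refresh["refreshId"], next_step(refresh["status"]))
```

Treating every unknown status as a failure is deliberate: it is safer for a pipeline to stop on an unexpected value than to continue with a model that may not have been processed.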

Summary

In this post you learned how to process your Analysis Services models with only Azure Data Factory. No other services are needed, which makes maintenance a little easier. In a next post we will also show you how to pause or resume your Analysis Services with the REST API. With a few extra steps you can also use this method to refresh a Power BI dataset, but we will show that in a future post.

Update: firewall turned on?