I want to see which files and folders are available in my workspace on a DevOps agent. This would make it much easier to determine the paths to, for example, other YAML files or PowerShell scripts. Is there an option to browse files on the agent?

Screenshot: Treeview after a checkout of the repository

Solution

Unless you are using your own virtual machine as a private agent (instead of a Microsoft-hosted agent), where you can log in to the actual VM, the answer is no. However, a single line of PowerShell makes it very easy. Don't worry, it's just copy and paste!

The trick is to add a YAML PowerShell task with an inline script that executes the tree command. The first parameter of the tree command is the folder or drive. This is where the predefined DevOps variables come in very handy. For this example we will use Pipeline.Workspace to see its content. The /F parameter shows all files in each directory.

###################################
# Show treeview of agent
###################################
- powershell: |
    tree "$(Pipeline.Workspace)" /F
  displayName: '2 Treeview of Pipeline.Workspace'

On a Windows agent the output looks a bit messy, but on an Ubuntu agent it is much better (see the first screenshot above).

Screenshot: Treeview of Pipeline.Workspace on Windows agent
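If the Windows output is hard to read, one possible alternative is a flat list of full paths. The sketch below swaps the tree command for the Get-ChildItem cmdlet. It is not part of the original solution, and the display name is only an example.

```yaml
###################################
# Sketch: flat file list instead of a tree
###################################
- powershell: |
    # List every file under the workspace, one full path per line
    Get-ChildItem -Path "$(Pipeline.Workspace)" -Recurse -File |
      Select-Object -ExpandProperty FullName
  displayName: 'List files in Pipeline.Workspace'
```

You lose the visual nesting, but each line is a complete path that you can copy straight into another task.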

A useful place for this tree command is, for example, right after a checkout of the repository. Now you know where, for example, your other YAML file is located, so you can call it in a next task.

Screenshot: YAML pipeline

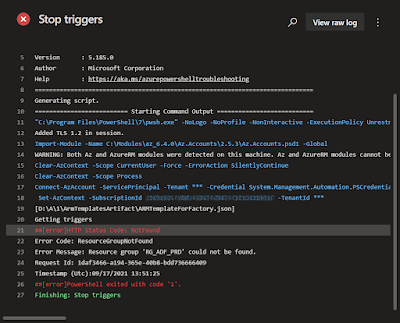
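In text form, a minimal sketch of that order of steps might look like this. The `checkout: self` step and the display name are assumptions for illustration; your own pipeline may check out its repository differently.

```yaml
steps:
# Assumption: the pipeline checks out its own repository
- checkout: self

# Show the workspace right after the checkout
- powershell: |
    tree "$(Pipeline.Workspace)" /F
  displayName: 'Treeview of Pipeline.Workspace after checkout'
```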

Or use it after the build of an artifact to see the result. And now that you know where the files are located, you could even show the content of a (text) file with an additional line of code.

###################################
# Show treeview of agent
###################################
- powershell: |
    tree "$(Pipeline.Workspace)" /F
    Write-Host "--------------------ARMTemplateForFactory--------------------"
    Get-Content -Path "$(Pipeline.Workspace)/s/CICD/packages/ArmTemplateOutput/ARMTemplateForFactory.json"
    Write-Host "-------------------------------------------------------------"
  displayName: '7 Treeview of Pipeline.Workspace and ArmTemplateOutput content'

Note: For large files it is wise to limit the number of rows to read with an additional parameter for Get-Content: -TotalCount 25

Conclusion

In this post you learned how a little PowerShell can help you debug your YAML pipelines. Don't forget to comment out the extra code when you go to production with your pipeline. Please share your debug tips in the comments below.
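As a possible alternative to commenting the step out, you could leave it in place and run it only for debug runs. The sketch below assumes you enable system diagnostics when queuing the run (or set the System.Debug variable to true yourself). Treat it as one option, not as the recommended setup.

```yaml
- powershell: |
    tree "$(Pipeline.Workspace)" /F
  displayName: 'Treeview of Pipeline.Workspace (debug only)'
  # Assumption: System.Debug is 'true' only for runs with diagnostics enabled
  condition: eq(variables['System.Debug'], 'true')
```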


Thx to colleague Walter ter Maten for helping!