To save some money on my Azure bill, I want to downscale the vCore or the number of DTUs for my Azure SQL Databases when we don't need a high performance and then upscale them again when for example the ETL starts. How do you arrange that in Azure?

|

| Change the Tier of your SQL Azure DB |

Solution

A few years ago we showed you how to do this with some PowerShell code in an Azure Automation Runbook with the AzureRM modules. However these old modules will be be out of support by the end of 2020. So now it is time to change those scripts.

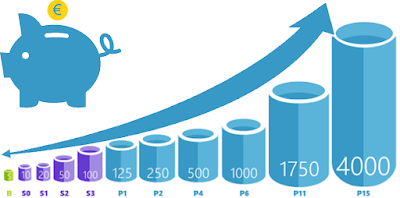

The script works for both Database Transaction Units (DTU) and Virtuals Cores (vCore). DTU uses a Service Tier (Basic, Standard and Premium) and then the actual Performance Level (B, S0 to S12, P1 to P15) which indicates the number of used DTUs. More DTUs means more performance and of course more costs.

The newer vCore model is now recommended by Microsoft. It is a bit more flexible and transparent: it actually shows you the processor, memory and IOPS. But the lowest vCore price is much more expensive than the lowest DTU price. So for development and testing purposes you probably still want to use DTU. The vCore model also uses a Service Tier (GeneralPurpose, BusinessCritical) and then number of vCores combined with the processor (e.x. GP_Gen4_1 or GP_Gen5_2).

Use the Microsoft documentation to compare both models, but a little comparison. S3 with 100 DTUs is about the same as General Purpose with 1 vCore. P1 with 125 DTUs is about the same as Premium with 1 vCore.

1) Create Automation Account

First we need to create an Automation Account. If you already have one with the Run As Account enabled then you can skip this step.

- Go to the Azure portal and create a new resource

- Search for automation

- Select Automation Account

- Choose a useful name for the Automation Account

- Select your Subscription, Resource Group and the Region

- For this example we will use the Azure Run As account. So make sure to enable it and then click on the Create button.

|

| Create Azure Automation Account |

2) Add Module Az.Sql

Before we start writing some code we need to add a PowerShell module called Az.Sql. This module contains methods we need in our code to upscale or downscale the Azure SQL database, but first we need to add Az.Accounts because Az.Sql depends on it.

If you forget this step you will get error messages while running your code that state that some of your commands are not recognized:

Get-AzSqlDatabase : The term 'Get-AzSqlDatabase' is not recognized as the name of a cmdlet, function, script file, or operable program. Check the spelling of the name, or if a path was included, verify that the path is correct and try again.

- Go to the newly created Azure Automation Account

- Go to Modules in the left menu

- Click on the Browse Gallery button

- Search for Az.Accounts

- Click on Az.Accounts in the result and import this module

- Also search for Az.Sql (but wait until Az.Accounts is actually imported)

- Click on Az.Sql in the result and import this module

|

| Adding a new module to your Automation Account |

Note: if you are using an existing Automation Account then you probably already added Az.Accounts.

3) Create Runbook

Now we are ready to create a runbook in the Azure Automation Account and start writing some PowerShell code.

- Go back to the overview page of your newly created Azure Automation Account

- Click on Runbooks in the left menu

- Click on the + Create a runbook button to create a new Runbook

- Enter a descriptive name for the Runbook like UpDownScaleDb

- Select PowerShell as Runbook type

- Optionally add a description and click on the Create button

|

| Create a Runbook |

4) Edit Runbook code

Next edit the new Runbook if it wasn't already opened by the previous step. Copy the code below and paste it in the editor. Then study the code and its comments to understand the code that can upscale and downscale your Azure SQL DB. It exists of five parts:

- Parameters

- Log in to Azure

- Get current pricing tier

- Upscale or downscale

- Logging

To up or downscale the script needs five parameters. The first three parameters 'ResourceGroupName', ServerName'' and 'DatabaseName' are to indicate for which database the DTU or vCore needs to be changed. The fourth and fifth parameters 'Edition' and 'PricingTier' are used to up or downscale your DB. There are a couple of parameter validations which you could extend to make your script even more monkey proof.

Note: if you want to call this script via Azure Data Factory (ADF), then you need to change the parameter part. You can find all the details to do that in our blog posts about Runbook parameters and ADF and using the Webhook activity in ADF. If this is your first time creating a runbook then first try the standard script and then adjust it to your needs.

Log in to Azure

This is a standard piece of code that you will see in all of our examples. Please read our blog post about the Azure Run as Account for more detailed information.

Get current pricing tier

This piece of code tests whether it can find the database and gets its current pricing tier. It stores the current pricing tier and uses it later on for an extra check when upscaling or downscaling the DB.

Upscale or downscale

This is the actual code for changing the database pricing tier. There is an extra check to compare the current pricing tier with the new pricing tier. It now throws an error when they are equal. You could change that to write an warning instead of an error.

Note: you could also send emails to notify you of any errors

Logging

The last piece of code is for logging purposes. It shows you that it successfully changed pricing tier of the DB (or DWH) and how long it took to accomplish that.

# PowerShell code

########################################################

# Parameters

########################################################

[CmdletBinding()]

param(

[Parameter(Mandatory=$True,Position=0)]

[ValidateLength(1,100)]

[string]$ResourceGroupName,

[Parameter(Mandatory=$True,Position=1)]

[ValidateLength(1,100)]

[string]$ServerName,

[Parameter(Mandatory=$True,Position=2)]

[ValidateLength(1,100)]

[string]$DatabaseName,

[Parameter(Mandatory=$False,Position=3)]

[ValidateLength(1,100)]

[string]$Edition,

[Parameter(Mandatory=$False,Position=4)]

[ValidateLength(1,100)]

[string]$PricingTier

)

# Keep track of time

$StartDate=(GET-DATE)

########################################################

# Log in to Azure with AZ (standard code)

########################################################

Write-Verbose -Message 'Connecting to Azure'

# Name of the Azure Run As connection

$ConnectionName = 'AzureRunAsConnection'

try

{

# Get the connection properties

$ServicePrincipalConnection = Get-AutomationConnection -Name $ConnectionName

'Log in to Azure...'

$null = Connect-AzAccount `

-ServicePrincipal `

-TenantId $ServicePrincipalConnection.TenantId `

-ApplicationId $ServicePrincipalConnection.ApplicationId `

-CertificateThumbprint $ServicePrincipalConnection.CertificateThumbprint

}

catch

{

if (!$ServicePrincipalConnection)

{

# You forgot to turn on 'Create Azure Run As account'

$ErrorMessage = "Connection $ConnectionName not found."

throw $ErrorMessage

}

else

{

# Something else went wrong

Write-Error -Message $_.Exception.Message

throw $_.Exception

}

}

########################################################

########################################################

# Getting the database for testing and logging purposes

########################################################

$MyAzureSqlDatabase = Get-AzSqlDatabase -ResourceGroupName $ResourceGroupName -ServerName $ServerName -DatabaseName $DatabaseName

if (!$MyAzureSqlDatabase)

{

Write-Error "$($ServerName)\$($DatabaseName) not found in $($ResourceGroupName)"

return

}

else

{

Write-Output "Current pricing tier of $($ServerName)\$($DatabaseName): $($MyAzureSqlDatabase.Edition) - $($MyAzureSqlDatabase.CurrentServiceObjectiveName)"

}

########################################################

# Set Pricing Tier Database

########################################################

# Check for incompatible actions

if ($MyAzureSqlDatabase.Edition -eq $Edition -And $MyAzureSqlDatabase.CurrentServiceObjectiveName -eq $PricingTier)

{

Write-Error "Cannot change pricing tier of $($ServerName)\$($DatabaseName) because the new pricing tier is equal to current pricing tier"

return

}

else

{

Write-Output "Changing pricing tier to $($Edition) - $($PricingTier)"

$null = Set-AzSqlDatabase -DatabaseName $DatabaseName -ServerName $ServerName -ResourceGroupName $ResourceGroupName -Edition $Edition -RequestedServiceObjectiveName $PricingTier

}

########################################################

# Show when finished

########################################################

$Duration = NEW-TIMESPAN –Start $StartDate –End (GET-DATE)

Write-Output "Done in $([int]$Duration.TotalMinutes) minute(s) and $([int]$Duration.Seconds) second(s)"

Note: once your database grows to a certain size you can not downscale it to certain levels because there is a max database size for certain pricing tiers. For example if your DB is larger than 250 GB then you can note downscale it below S3. There are size checks that you could add to avoid errors.

5) Testing

Testing the functionality of your code can be done in the runbook editor. Click on the Test pane button above your script. After that you need to fill in the five parameters and hit the Start button to execute the script.

|

| Testing a Powershell script |

6) Scheduling Runbook

To schedule your runbook in Azure Automation you first need to publish it via the Runbook editor. After it has been published you can add a schedule to this runbook.

- Edit the script in the runbook editor

- Click on publish (the editor will close and you will be redirected to the overview page)

- In the overview page click on Link to schedule

- In the Schedule menu you can select an existing schedule or create a new one

- In the Parameter menu you can provide the value for the five parameters

|

| Add schedule to a runbook |

Note: If you have multiple Azure SQL Database that you all want to up or downscale on the same time then you have a slight problem because you cannot reuse a schedule for the same runbook multiple times with different parameters (please upvote or add a comment). Workarounds:

- create multiple identical schedules (ugly but works)

- do everything in one big script (less flexible but works)

|

| Log of runbook executions |

|

| Details of single execution |

Summary

In this blog post you learned how to schedule an up- or scale of your Azure SQL DB (or DWH) to save money in case you don't need a high performace 24 hours a day. Scheduling is done in Azure Automation, but with some minor changes you can also do that via an ADF pipeline.

The Powershell scripting gives you the most flexibility for example to add additional tests and notifications, but for those who don't like scripting: in a next blog post we will show you how to accomplish this with TSQL code only. You can either use that for an on-the-fly up- or downscale or use it in a Store Procedure Activity in Azure Data Factory.