Microsoft recently introduced Power BI dataflows for self-service ETL or data preparation. By default, dataflows store their metadata and results in an Azure Data Lake Storage (ADLS) account that is only visible to Power BI. However, you can also bring your own storage account. How does that work, and why would you want to?

Figure: Bring Your Own Storage in preview

Solution

As we mentioned in our previous post, Power BI dataflows can be seen as a self-service data preparation tool. It is easily accessible, so that people in your organization outside IT can get started with transforming and maintaining data. Nowadays there are often several people or business analysts in a department who maintain their own Excel files. With Power BI dataflows you can centralize this process and contribute to the ideal of "one version of the truth".

When you bring your own ADLS Gen2 storage account (StorageV2) for Power BI dataflows, other services like Azure Data Factory or Azure Databricks can use that same data. This makes it possible to do lightweight data preparation with a user-friendly tool for corporate data warehousing or data science. Those services could even write data back to the data lake to create 'external' dataflows that are not maintained in Power BI. However, Power BI dataflows do not (yet?) replace SSIS, Azure Data Factory or any other ETL tool.

Configure and connect to your own storage account

First you have to create an ADLS Gen2 storage account. Make sure the storage account is in the same region as the Power BI tenant. Microsoft's documentation explains step by step how to create such an account.
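As a rough illustration of the two requirements above (a StorageV2 account with Gen2 capabilities, in the same region as the Power BI tenant), the sketch below builds the kind of request body an Azure Resource Manager deployment would take. The helper names, the `Standard_LRS` SKU and the example regions are assumptions for illustration only; `isHnsEnabled` is the Resource Manager property that switches on the hierarchical namespace, which is what makes a StorageV2 account "Gen2".

```python
def normalize_region(region: str) -> str:
    """Compare regions leniently: 'West Europe' and 'westeurope' match."""
    return region.replace(" ", "").lower()


def build_storage_account_body(storage_region: str, tenant_region: str) -> dict:
    """Return a Resource Manager style body for an ADLS Gen2 account.

    Raises ValueError when the storage account would not be in the same
    region as the Power BI tenant.
    """
    if normalize_region(storage_region) != normalize_region(tenant_region):
        raise ValueError(
            f"Storage region {storage_region!r} differs from "
            f"Power BI tenant region {tenant_region!r}"
        )
    return {
        "location": normalize_region(storage_region),
        "kind": "StorageV2",                    # general-purpose v2 account
        "sku": {"name": "Standard_LRS"},        # assumption: any supported SKU
        "properties": {"isHnsEnabled": True},   # hierarchical namespace = Gen2
    }


body = build_storage_account_body("West Europe", "westeurope")
print(body["kind"], body["properties"]["isHnsEnabled"])
```

Checking the region before anything is created is deliberate: the connection to Power BI is a one-time action, so a mismatch is cheaper to catch up front.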

Next, execute the following steps to make a connection with your own storage account. Note that the last step is a one-time action that cannot be changed afterwards.

- Add the "Reader" role on your storage account for the Power BI Service.

- Create a Blob Container to save the dataflow content.

- Allow Power BI and Power Query to access the content in the Blob Container.

- Connect your Azure Data Lake Storage Gen2 account to Power BI.

You need the following permissions to make the connection:

- Role "Owner" in the Azure Subscription (service administrator / classic administrator) to add an ADLS Gen2 storage account. This is also required to give the Power BI Service access to the storage account and Blob Container.

- Global Administrator in Office 365 or Azure Active Directory to connect your ADLS Gen2 storage account with dataflows. This has to be done in the Power BI admin portal. The Power BI Service Administrator role is not sufficient to perform these actions.

Result

To actually store the dataflow definition and the related data files in your ADLS Gen2 storage account, you must create a new Power BI app workspace or update an existing one. If you update an existing workspace, make sure it does not already contain dataflows. Otherwise you cannot change the storage setting.

In this case we created a new workspace. Turn on "Dataflow storage (preview)" under the "Advanced" settings. We built the same (simple) dataflow as in our previous post. Save your dataflow and click "Refresh".

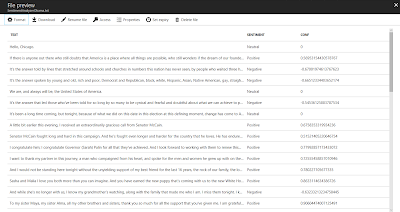

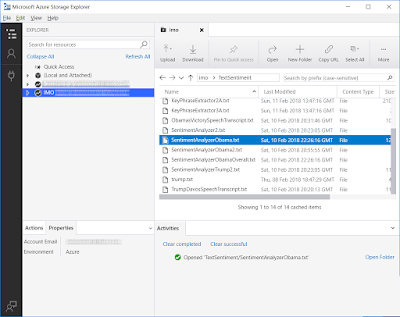

Azure Storage Explorer

When the dataflow has been refreshed, go to the Blob Container you created earlier in your ADLS Gen2 storage account. Here you will find the definition (source code) of the dataflow and the output. Note that the content of the ADLS Gen2 storage account is only visible in Azure Storage Explorer, which is a free download from Microsoft.

Figure: Azure Storage Explorer - Result in own ADLS Gen2 storage account
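Other tools can read these files directly. The sketch below assumes the folder follows the Common Data Model layout: a `model.json` file that lists each entity with its attributes and partition files, and the data itself in CSV files without a header row. The trimmed `model.json` content, the entity name `Customers`, the sample rows and the helper names are all made up for illustration, so check them against the files in your own container.

```python
import csv
import io
import json

# Hypothetical, heavily trimmed model.json; a real file holds more metadata.
SAMPLE_MODEL_JSON = """
{
  "name": "SalesDataflow",
  "entities": [
    {
      "name": "Customers",
      "attributes": [
        {"name": "CustomerId", "dataType": "int64"},
        {"name": "CustomerName", "dataType": "string"}
      ],
      "partitions": [
        {"name": "Part001", "location": "<url-of-partition-file>"}
      ]
    }
  ]
}
"""


def describe_entities(model_json: str) -> dict:
    """Map each entity name to its ordered list of attribute (column) names."""
    model = json.loads(model_json)
    return {
        entity["name"]: [attr["name"] for attr in entity.get("attributes", [])]
        for entity in model.get("entities", [])
    }


def read_partition(csv_text: str, columns: list) -> list:
    """Parse header-less CSV text, naming the columns from the model."""
    reader = csv.reader(io.StringIO(csv_text))
    return [dict(zip(columns, row)) for row in reader]


columns = describe_entities(SAMPLE_MODEL_JSON)["Customers"]
rows = read_partition("1,Contoso\n2,Fabrikam\n", columns)
print(rows)
```

In practice the CSV text would come from the partition location rather than an inline string. All values come back as strings here; converting them according to each attribute's `dataType` is left out to keep the sketch short.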

You can now choose per workspace whether you want to use this new data lake. This only works for the new workspaces, which are currently still in preview as well.

Figure: Power BI Workspace settings

Common errors

When you try to connect to your own storage account in the admin portal of the Power BI Service, you can get several errors.

You must have global administrator permissions

Only Global Administrators in Office 365 or Azure Active Directory are administrators in Power BI and are therefore able to connect to the storage account. See Microsoft's documentation for more information about administrator roles in Power BI.

There was a problem accessing your dataflow storage account

After you create your storage account, it can take up to 30 minutes before you can make a connection. Also check the details you enter for spelling mistakes.

This error occurs, for example, when you try to connect to the ADLS storage account in the Power BI Service with an account from outside the organization. In this case, the organization (subscription) is the one in which the storage account was created.
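To catch the spelling mistakes mentioned above before you submit the connection, a small check like the following can help. It assumes the connection form asks for a subscription ID, a resource group name and a storage account name; the function name and the sample values are hypothetical. The patterns reflect Azure's naming rules: a subscription ID is a GUID, and a storage account name is 3 to 24 lowercase letters and digits.

```python
import re

GUID_RE = re.compile(r"[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}")
STORAGE_ACCOUNT_RE = re.compile(r"[a-z0-9]{3,24}")
RESOURCE_GROUP_RE = re.compile(r"[\w\-.()]{1,90}")


def check_connection_details(subscription_id: str,
                             resource_group: str,
                             storage_account: str) -> list:
    """Return a list of human-readable problems; an empty list means no typos found."""
    problems = []
    if not GUID_RE.fullmatch(subscription_id):
        problems.append("Subscription ID is not a GUID")
    if (not RESOURCE_GROUP_RE.fullmatch(resource_group)
            or resource_group.endswith(".")):
        problems.append("Resource group name contains invalid characters")
    if not STORAGE_ACCOUNT_RE.fullmatch(storage_account):
        problems.append(
            "Storage account name must be 3-24 lowercase letters or digits"
        )
    return problems


# Sample values are made up; the stray capital letters are the 'typo'.
print(check_connection_details(
    "00000000-0000-0000-0000-000000000000", "rg-dataflows", "MyDataLake"
))
```

The check only validates the shape of the values, including stray spaces from copy and paste. It cannot tell whether the account exists or whether the signed-in user belongs to the right organization.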

Conclusion

In this post we showed you how to use your own Azure Data Lake Storage account for dataflows instead of the default storage provided by Power BI. This new feature has several possible use cases. For example:

- Data preparation for 'corporate' data warehousing by a business user with a user-friendly tool

- Data preparation for 'corporate' data science by a business user with a user-friendly tool

- Creating 'external' dataflows for Power BI with Azure Services like Data Factory or Databricks

- Using data from other CDM-compliant applications like Dynamics 365 and Office 365

We hope Microsoft will make it a bit easier to bring your own storage, because at the moment there are a lot of steps to take and you need a lot of permissions to do it. This discourages people from setting up this powerful option.

We also expect some more admin capabilities in Power BI, because at this moment you cannot change your dataflow storage once Power BI is connected to your own ADLS account (so be very careful). And for larger corporations one ADLS account is probably not enough. It is also expected that the integration and collaboration with other Azure services will be improved so that you are even more flexible in choosing the services in your BI landscape.