I need to use some passwords and keys in my Databricks notebook, but for security reasons I don't want to store them in the notebook. How do I prevent storing sensitive data in Databricks?

|

| Using Azure Key Vault for Azure Databricks |

Solution

Let's say you want to connect to an Azure SQL Database with SQL Authentication or an Azure Blob Storage container with an Access key in Databricks. Instead of storing the password or key in the notebook in plain text, we will store it in an Azure Key Vault as a secret. With an extra line of code we will retrieve the secret and use its value for the connection.

The example below shows each step in detail, including creating an Azure Key Vault, but assumes you already have an Azure Databricks notebook and a cluster to run its code. The steps to give Databricks access to the Key Vault differ slightly from those for Azure Data Factory or an Azure Automation Runbook, because the access policy is set from within Databricks itself.

1) Create Key Vault

The first step is to create a Key Vault. If you already have one, you can skip this step.

- Go to the Azure portal and create a new resource

- Search for key vault

- Select Key Vault and click on Create

- Select your Subscription and Resource Group

- Choose a useful name for the Key Vault

- Select your Region (the same as your other resources)

- And choose the Pricing tier. We will use Standard for this demo

|

| Creating a new Key Vault |

2) Add Secret

Now that we have a Key Vault we can add the password of the SQL Server user or the key of the Azure Storage account. The Key Vault stores three types of items: Secrets, Keys and Certificates. For passwords, account keys or connection strings you need a Secret.

- Go to the newly created Azure Key Vault

- Go to Secrets in the left menu

- Click on the Generate/Import button to create a new secret

- Choose Manual in the upload options

- Enter a recognizable and descriptive name. You will use this name later in Databricks

- Next step is to add the secret value which we will retrieve in Databricks

- Leave Content type empty and don't set an activation or expiration date for this example

- Make sure the secret is enabled and then click on the Create button

|

| Adding a new secret to Azure Key Vault |

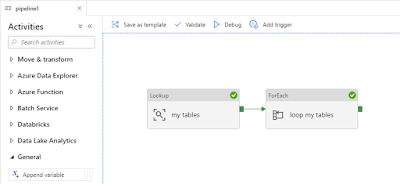
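Since the secret name ends up in your notebook code, it helps to check it before creating the secret. The sketch below is not part of the portal steps; it assumes the usual Key Vault naming rule (1 to 127 characters, only letters, digits and hyphens), and the function name `is_valid_secret_name` is made up for this example.

```python
import re

# Assumption: Key Vault secret names are 1-127 characters long and may
# only contain letters, digits and hyphens.
_SECRET_NAME = re.compile(r"[0-9A-Za-z-]{1,127}")


def is_valid_secret_name(name: str) -> bool:
    """Return True if `name` looks acceptable as a Key Vault secret name."""
    return _SECRET_NAME.fullmatch(name) is not None


# Print the verdict for a few candidate names.
for candidate in ("blobkey", "sql-password", "blob_key"):
    print(candidate, is_valid_secret_name(candidate))
```

Under this rule a name with an underscore, such as `blob_key`, would be rejected, so a hyphen is the safer separator for secret names.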

3) Create Secret Scope

Instead of adding Databricks via the Access policies in the Key Vault, we will use a special page in the Databricks workspace that creates an application in Azure Active Directory and gives that application access to the Key Vault. At the time of writing this is still a Public Preview feature, so the layout could still change and it will probably become a menu item once it reaches GA.

- Go to your Azure Databricks overview page in the Azure portal

- Click on the Launch workspace button in the middle (a new tab will be opened)

- Change the URL of the new tab by adding '#secrets/createScope' after the URL

Example

https://westeurope.azuredatabricks.net/?o=1234567890123456

Becomes

https://westeurope.azuredatabricks.net/?o=1234567890123456#secrets/createScope

|

| Find 'Create Secret Scope' form |
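If you need this URL more often, the change can be sketched in a few lines of Python. This is only an illustration of the URL edit described above; the function name `create_scope_url` is hypothetical and the workspace URL is the example one from this post.

```python
from urllib.parse import urlsplit, urlunsplit


def create_scope_url(workspace_url: str) -> str:
    """Return the workspace URL with the 'secrets/createScope' fragment set."""
    parts = urlsplit(workspace_url)
    # Only the fragment (the part after '#') is replaced; the host and
    # the '?o=...' query string are kept as they are.
    return urlunsplit(parts._replace(fragment="secrets/createScope"))


print(create_scope_url("https://westeurope.azuredatabricks.net/?o=1234567890123456"))
```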

Next step is to fill in the 'Create Secret Scope' form. This will connect Databricks to the Key Vault.

- Fill in the name of your secret scope. It should be unique within the workspace and will be used in code to retrieve the secret from Key Vault.

- The Manage Principal for the premium tier can either be Creator (secret scope only for you) or All Users (secret scope for all users within the workspace). For the standard tier you can only choose All Users.

- The DNS name is the URL of your Key Vault. You can find it on the Overview page of your Azure Key Vault and it looks like: https://bitools.vault.azure.net/

- The Resource ID is a path that points to your Azure Key Vault. In the following path, replace the three parts within the brackets:

/subscriptions/[1.your-subscription]/resourcegroups/[2.resourcegroup_of_keyvault]/providers/Microsoft.KeyVault/vaults/[3.name_of_keyvault]

- The GUID of your subscription

- The name of the resource group that hosts your Key Vault

- The name of your Key Vault

Tip: if you go to your Key Vault in the Azure portal then this path is part of the URL which you could copy

- Click on the Create button and when creation has finished click on the Ok button

|

| Create Secret Scope |
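The two Key Vault values for the form can also be put together in code, which avoids typos in the long path. This is a minimal sketch that assumes a Key Vault in the public Azure cloud; the function names, the all-zero subscription GUID and the resource group name `rg-bitools` are placeholders for this example.

```python
def key_vault_dns_name(vault_name: str) -> str:
    """Build the DNS name of a Key Vault (public Azure cloud assumed)."""
    return f"https://{vault_name}.vault.azure.net/"


def key_vault_resource_id(subscription_id: str, resource_group: str, vault_name: str) -> str:
    """Build the Resource ID path from the three parts described above."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourcegroups/{resource_group}"
        f"/providers/Microsoft.KeyVault/vaults/{vault_name}"
    )


# Placeholder values; replace them with your own subscription and resource group.
print(key_vault_dns_name("bitools"))
print(key_vault_resource_id("00000000-0000-0000-0000-000000000000", "rg-bitools", "bitools"))
```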

4) Verify Access policies

Next you want to verify the rights of Databricks in the Key Vault and probably restrict some options because by default it gets a lot of permissions.

- Go to your Key Vault in the Azure Portal

- Go to Access policies in the left menu

- Locate the application with Databricks in its name

- Check which permissions you need. When using secrets only, the Get and List permissions for secrets are probably enough.

|

| Verify permissions of Databricks in Azure Key Vault |

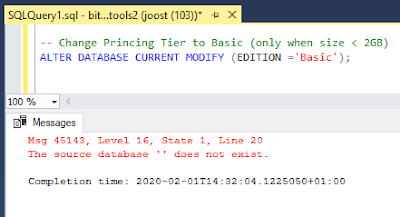

5) Scala code

Now it is time to retrieve the secrets from the Key Vault in your notebook with Scala code (the Python code follows in the next step). The first snippet is for when you have forgotten the name of your secret scope or want to know which scopes are available in your workspace.

// Scala code
// Get list of all scopes
val mysecrets = dbutils.secrets.listScopes()
// Loop through list
mysecrets.foreach { println }

|

| Scala code to get secret scopes |

If you want to get the value of a single secret you can execute the following code. Note that the value will not be shown in your notebook execution result.

// Scala code
dbutils.secrets.get(scope = "bitools_secrets", key = "blobkey")

|

| Scala code to retrieve secret from the Azure Key Vault |

And if you want to use that code to retrieve the key from your blob storage account and get a list of files you can combine it in the following code. The name of the storage account is 'bitools2'

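// Scala code
// Mount the container, reading the storage account key from the secret scope
dbutils.fs.mount(
  source = "wasbs://sensordata@bitools2.blob.core.windows.net",
  mountPoint = "/mnt/bitools2",
  extraConfigs = Map("fs.azure.account.key.bitools2.blob.core.windows.net" -> dbutils.secrets.get(scope = "bitools_secrets", key = "blobkey")))

// Get list of files in the mounted container
display(dbutils.fs.ls("/mnt/bitools2"))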

|

| Scala code to mount Storage and get list of files |

6) Python code

Now it is time to retrieve the secrets from the Key Vault in your notebook with Python code. The first snippet is for when you have forgotten the name of your secret scope or want to know which scopes are available in your workspace.

# Python code
# Get list of all scopes
mysecrets = dbutils.secrets.listScopes()
# Loop through list
for secret in mysecrets:
    print(secret.name)

|

| Python code to get secret scopes |

If you want to get the value of a single secret you can execute the following code. Note that the value will not be shown in your notebook execution result.

# Python code
dbutils.secrets.get(scope = "bitools_secrets", key = "blobkey")

|

| Python code to retrieve secret from the Azure Key Vault |
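A misspelled scope or secret name only shows up as an error when the secret is requested. As a small sketch, the two list calls can be combined with the get call so that you get a clearer message. The helper name `get_secret_checked` is made up for this example, and `dbutils` is passed in as a parameter so the function can also be tried outside a notebook with a stand-in object.

```python
def get_secret_checked(dbutils, scope: str, key: str) -> str:
    """Return the secret value after checking that scope and key exist."""
    # listScopes() returns objects with a `name` attribute.
    scope_names = [s.name for s in dbutils.secrets.listScopes()]
    if scope not in scope_names:
        raise ValueError(f"Unknown secret scope '{scope}'. Available: {scope_names}")

    # list(scope) returns metadata objects with a `key` attribute (never the values).
    key_names = [m.key for m in dbutils.secrets.list(scope)]
    if key not in key_names:
        raise ValueError(f"Secret '{key}' not found in scope '{scope}'. Available: {key_names}")

    return dbutils.secrets.get(scope=scope, key=key)
```

In a notebook you would call it as `blobkey = get_secret_checked(dbutils, "bitools_secrets", "blobkey")`.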

And if you want to use that code to retrieve the key from your blob storage account and get a list of files you can combine it in the following code. The name of the storage account is 'bitools2'

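# Python code
# Mount the container, reading the storage account key from the secret scope
dbutils.fs.mount(
  source = "wasbs://sensordata@bitools2.blob.core.windows.net",
  mount_point = "/mnt/bitools2a",
  extra_configs = {"fs.azure.account.key.bitools2.blob.core.windows.net":dbutils.secrets.get(scope = "bitools_secrets", key = "blobkey")})

# Get list of files in the mounted container
display(dbutils.fs.ls("/mnt/bitools2a"))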

|

| Python code to mount Storage and get list of files |
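Running the mount code a second time fails because the mount point already exists. One possible way around that, sketched below, is to check `dbutils.fs.mounts()` first. The helper name `mount_blob_container` and its parameters are made up for this example, and `dbutils` is again passed in as a parameter so the function can be tried with a stand-in object.

```python
def mount_blob_container(dbutils, account: str, container: str,
                         mount_point: str, scope: str, key: str) -> bool:
    """Mount a blob container unless the mount point is already in use.

    Returns True if a new mount was created, False if it already existed.
    """
    # mounts() returns objects with a `mountPoint` attribute.
    if any(m.mountPoint == mount_point for m in dbutils.fs.mounts()):
        return False

    host = f"{account}.blob.core.windows.net"
    dbutils.fs.mount(
        source=f"wasbs://{container}@{host}",
        mount_point=mount_point,
        # The account key comes from the secret scope, not from the notebook.
        extra_configs={
            f"fs.azure.account.key.{host}": dbutils.secrets.get(scope=scope, key=key)
        },
    )
    return True
```

With the names from this post the call would be `mount_blob_container(dbutils, "bitools2", "sensordata", "/mnt/bitools2a", "bitools_secrets", "blobkey")`.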

Conclusion

In this post you learned how to store sensitive data for your data preparation in Databricks the right way by creating a Key Vault and using it in your notebook. The feature is still in public preview, which probably means the layout will change slightly before going to GA, but the features will most likely stay the same. Another point of attention is that you have no influence on the name of the Databricks application in the AAD or on the default permissions it gets in the Key Vault.

In previous posts we also showed you how to use the same Key Vault in an Azure Data Factory and an Azure Automation Runbook to avoid hardcoded passwords and keys. In a future post we will show you how to use it in other tools like Azure Functions.